NFV: From CI/CD to Hypervisor or Container Based Deployments

Marie-Paule Odini, HPE

With NFV (Network Functions Virtualization), the process starts with the design of the Virtualized Network Function (VNF). This is generally done by the supplier of the VNF, typically a vendor. The VNF is then sold to a service provider, who integrates it into its environment to enhance an existing service or deploy a new one.

This process takes several steps, first on the supplier side and then on the service provider side, before, during and after deployment. It is increasingly automated, with closed loops and iterations to ensure best-in-class services, no service downtime, and constant feature enhancement so that the service environment remains competitive.

CI/CD: Continuous Integration/Continuous Delivery

DevOps (Development and Operations) and CI/CD (Continuous Integration/Continuous Delivery) are terms now commonly used in software development with agile methods, which rely on iterative models and increasingly automated and collaborative tools.

Continuous Integration is typically used during the development of a piece of software. It relies on a set of tools, such as a coding environment, library access, an interpreter or compiler, tools to combine different pieces of code, a build system and a testing environment, with programmatic access and smooth integration between the components. This allows collaboration and iteration until the test results permit moving to the next step, which is Continuous Delivery.

Continuous Delivery is the process that delivers a piece of software to a production environment. It takes the software together with a set of metadata from Continuous Integration: a description of the environment required to execute the software, the dependencies, and possibly some test routines, test scripts and expected test results. It pushes all of this into a system that combines the code with the other pieces of code that constitute the service, or a bigger component of the service. Continuous Delivery goes through the configuration of the environment, the upload of the software to the production environment, the configuration of the software, some tests, and a few iterations until the deployment test results are satisfactory. The next phase is the operation of the live service.
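The delivery loop described above can be sketched as follows. This is a minimal illustration in Python, not a real delivery tool: the `Release` class, the `deliver` function and the dictionary standing in for the production environment are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Release:
    """Software plus the metadata handed over by Continuous Integration (hypothetical)."""
    name: str
    dependencies: List[str] = field(default_factory=list)
    test_scripts: List[Callable[[Dict], bool]] = field(default_factory=list)


def deliver(release: Release, environment: Dict, max_iterations: int = 3) -> int:
    """Repeat configure, upload, configure software, test until the tests pass.

    Returns the number of iterations that were needed.
    """
    for attempt in range(1, max_iterations + 1):
        environment["dependencies"] = list(release.dependencies)  # configure the environment
        environment["installed"] = release.name                   # upload the software
        environment["attempt"] = attempt                          # (re)configure the software
        if all(test(environment) for test in release.test_scripts):
            return attempt                                        # ready for live operation
    raise RuntimeError(f"{release.name}: deployment tests still failing")


# Example: a deployment test that only passes after one reconfiguration.
release = Release("vfirewall-1.0", ["libssl"], [lambda env: env["attempt"] >= 2])
iterations_needed = deliver(release, {})
```

In a real pipeline each of the three assignment lines would be a call into a configuration or orchestration tool; the point here is only the iterate-until-satisfactory structure.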

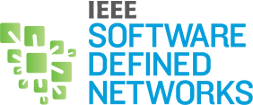

In an NFV environment, VNFs are provided by a set of suppliers to a service provider, who generally assembles the different VNFs to deliver a service. The steps are generally:

- On the supplier side:

  - Develop VNF

  - Test VNF

- On the Service Provider side:

  - Stage VNF

  - Deploy VNF

  - Operate the VNF as part of the live service

At each stage in the process, a different set of tools is used to perform different tasks, and a VNF package is passed with the application executable code (image) and some artifacts necessary for the next step.
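As a rough sketch of this hand-over, the five steps can be modeled as an ordered list through which a package is passed. The `PIPELINE` list, the `run_pipeline` function and the dictionary used as a package are assumptions made for illustration; they do not correspond to any specific tool.

```python
from typing import Callable, Dict, List, Tuple

# (step, responsible party), in the order given in the text.
PIPELINE: List[Tuple[str, str]] = [
    ("Develop", "supplier"),
    ("Test", "supplier"),
    ("Stage", "service provider"),
    ("Deploy", "service provider"),
    ("Operate", "service provider"),
]


def run_pipeline(package: Dict, tools: Dict[str, Callable[[Dict], Dict]]):
    """Pass the package through every step; each tool returns the package for the next step."""
    visited = []
    for step, owner in PIPELINE:
        tool = tools.get(step, lambda pkg: pkg)  # steps without a tool pass the package through
        package = tool(package)
        visited.append(f"{owner}: {step}")
    return package, visited


# Example: only the Test step has a tool, and it attaches its results to the package.
tools = {"Test": lambda pkg: {**pkg, "test_results": "passed"}}
final_package, visited = run_pipeline({"image": "vnf.img"}, tools)
```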

Typically, from Develop to Test, the image is passed with a VNF Descriptor that describes the characteristics of the resources needed for that VNF to run, possibly a VNF Manager or some lifecycle management scripts, and possibly a config file to configure the VNF for the tests. This constitutes VNF Package #1.

From Test to Stage: VNF Package #2 may be very similar to VNF Package #1 but include some test scripts and expected test results.
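The two packages can be pictured as one data structure whose test-related fields are filled in at the Test step. The sketch below is illustrative only: the `VnfPackage` class, its field names and the file names are hypothetical and do not reproduce the ETSI NFV packaging format.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, List, Optional


@dataclass
class VnfPackage:
    image: str                                  # application executable code
    descriptor: Dict[str, int]                  # resources the VNF needs to run
    lifecycle_scripts: List[str] = field(default_factory=list)
    config_file: Optional[str] = None
    test_scripts: List[str] = field(default_factory=list)
    expected_results: Dict[str, str] = field(default_factory=dict)


def add_test_artifacts(pkg: VnfPackage, test_scripts, expected_results) -> VnfPackage:
    """Build Package #2 from Package #1 without modifying Package #1."""
    return replace(
        pkg,
        test_scripts=list(test_scripts),
        expected_results=dict(expected_results),
    )


package_1 = VnfPackage(
    image="vnf.qcow2",
    descriptor={"vcpus": 2, "memory_mb": 4096},
    lifecycle_scripts=["start.sh"],
    config_file="test.cfg",
)
package_2 = add_test_artifacts(package_1, ["smoke_test.sh"], {"smoke_test.sh": "PASS"})
```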

Each step can be used to refine both functional and non-functional aspects (such as performance, resource consumption and security). Early steps ensure functional completeness; middle stages permit the profiling of performance and the assessment of security. Later stages take these characterized VNFs and specialize them to the service provider’s environment, for instance to meet SDN support requirements specific to the service provider, and to define how the resulting SDN/NFV components interact with the suppliers’ system integrity and fault recovery processes.

At each step, the VNF package and its embedded test artifacts are tuned to include the results from that step and to prepare for the next one.

CI/CD and VNF Virtualization

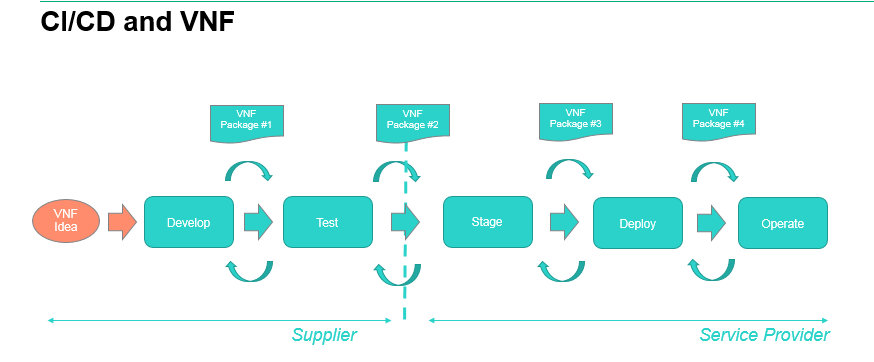

As defined by the ETSI NFV SWA specifications, a VNF is typically a set of VNF Components (VNFCs), each deployed on a virtualization container. ETSI NFV is agnostic of the virtualization container used: it can be hypervisor based or container based, such as a Linux container or Docker.

Multiple combinations then exist:

- a) A given supplier may deliver only hypervisor based VNFs

- b) A given supplier may deliver only Docker based VNFs

- c) A given supplier may deliver a mix of hypervisor based VNFs and Docker based VNFs

- d) A given supplier may deliver hybrid VNFs: some VNFCs are Docker based, some hypervisor based

- Each supplier may follow model a), b), c) or d)
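The supplier models listed above follow directly from the virtualization type of each VNFC. A minimal sketch, assuming a supplier catalog is given as a mapping from VNF name to the list of its VNFC types (the function names and the `"hypervisor"`/`"container"` labels are illustrative choices, not ETSI terms):

```python
from typing import Dict, Iterable

ALLOWED = {"hypervisor", "container"}


def vnf_kind(vnfc_kinds: Iterable[str]) -> str:
    """Classify one VNF from the virtualization type of each of its VNFCs."""
    kinds = set(vnfc_kinds)
    if not kinds or not kinds <= ALLOWED:
        raise ValueError(f"unexpected VNFC types: {sorted(kinds)}")
    return "hybrid" if len(kinds) == 2 else next(iter(kinds))


def supplier_model(catalog: Dict[str, Iterable[str]]) -> str:
    """Classify a supplier from all the VNFs it delivers."""
    kinds = {vnf_kind(vnfcs) for vnfcs in catalog.values()}
    if not kinds:
        raise ValueError("empty catalog")
    if "hybrid" in kinds:
        return "hybrid VNFs"
    if kinds == {"hypervisor"}:
        return "hypervisor based only"
    if kinds == {"container"}:
        return "container based only"
    return "mix of hypervisor based and container based VNFs"
```

For example, `supplier_model({"vFW": ["hypervisor"], "vDNS": ["container"]})` falls into the mixed case, while a single VNF with VNFCs of both types makes the supplier a hybrid one.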

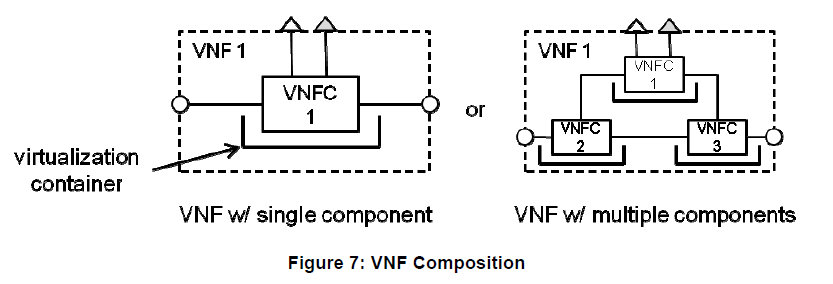

Similarly, a service provider may decide to deploy only hypervisor based VNFs, only Docker based VNFs, or a mix of both. Whether direct or indirect mode is used, the VNFM or the NFVO then needs to pass either an image with a guest OS for a hypervisor based VM, or an image without a guest OS for containers such as Docker.

A fourth case, not described here, is to support hybrid VNFs with a mix of hypervisor based and Docker based VNFCs.

The particular function embedded within a given NFV deployment can be deployed in many ways. The choice of the deployment option best suited to a particular service provider’s environment depends not just on the functional aspects but also on non-functional ones such as performance and security. Different virtualization options offer different levels of cost and performance, along with different security and integrity tradeoffs.
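The two decisions in this paragraph, what the service provider accepts and which kind of image must be passed, can be sketched as two small checks. The function names and the `guest_os` key are hypothetical; a real VNFM or NFVO would read this information from the VNF descriptor.

```python
from typing import Dict, Set


def image_requirements(kind: str) -> Dict[str, bool]:
    """Kind of image to pass for one VNFC, following the rule stated in the text."""
    if kind == "hypervisor":
        return {"guest_os": True}   # VM image that carries its own guest OS
    if kind == "container":
        return {"guest_os": False}  # container image, no guest OS
    raise ValueError(f"unknown virtualization type: {kind}")


def can_deploy(vnfc_kinds: Set[str], supported: Set[str]) -> bool:
    """True when every VNFC type of the VNF is supported by the service provider."""
    return bool(vnfc_kinds) and vnfc_kinds <= supported


# Example: a provider that only runs hypervisor based VNFs.
hypervisor_only = {"hypervisor"}
accepts_vm_vnf = can_deploy({"hypervisor"}, hypervisor_only)
accepts_hybrid_vnf = can_deploy({"hypervisor", "container"}, hypervisor_only)
```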

Although this short article has presented the process in a linear step-by-step deployment model, the potential choice of virtualization containers may lead to multiple iterations around the later steps as different virtualization choices are made in response to cost, performance and other operational concerns.

Consequently, the supplier needs to deliver not only the proper VNF image, able to run on the supported hypervisor versions or container OS versions, but also the proper VNF Managers, or VNF Manager scripts and artifacts, compatible with the service provider's MANO and NFVI environment. ETSI NFV supports these different models. It is then up to the implementation of the NFVO and the VIM either to use a single VIM that supports both hypervisors and containers (for example OpenStack with a Docker plug-in), or to use two separate VIMs (for example OpenStack for hypervisors and Kubernetes for Docker).

Based on these different combinations and on what the service provider decides to support on its NFVI (NFV Infrastructure), the CI/CD process will have to adapt and support a larger or smaller number of combinations, as well as the co-existence of different tools, packaging and interface implementations.
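From the NFVO's point of view, the single-VIM and two-VIM options differ only in how a VNFC is routed to a VIM. The sketch below assumes each VIM is described by the set of virtualization types it supports; `pick_vim` and the dictionary layout are hypothetical, and the VIM names are simply the examples given in the text.

```python
from typing import Dict, Set


def pick_vim(vims: Dict[str, Set[str]], kind: str) -> str:
    """Return the first registered VIM that supports the given virtualization type."""
    for name, supported in vims.items():
        if kind in supported:
            return name
    raise LookupError(f"no VIM supports {kind!r}")


# Option 1: a single VIM handling both hypervisors and containers.
single_vim = {"openstack": {"hypervisor", "container"}}

# Option 2: two separate VIMs, one per virtualization type.
two_vims = {"openstack": {"hypervisor"}, "kubernetes": {"container"}}

placements = {
    "single": [pick_vim(single_vim, k) for k in ("hypervisor", "container")],
    "split": [pick_vim(two_vims, k) for k in ("hypervisor", "container")],
}
```

With two VIMs, a hybrid VNF ends up spread across both, which is one reason the VNF Manager scripts and artifacts have to match the service provider's environment.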

Marie-Paule Odini holds a master's degree in electrical engineering from Utah State University. Her telecom experience includes both voice and data. After managing the HP worldwide VoIP program, HP wireless LAN program and HP Service Delivery program, she is now HP CMS CTO for EMEA and also a Distinguished Technologist, NFV, SDN at Hewlett-Packard. Since joining HP in 1987, Odini has held positions in technical consulting, sales development and marketing within different HP organizations in France and the U.S. All of her roles have focused on networking or the service provider business, either in solutions for the network infrastructure or for the operation.

Editor:

Neil Davies is an expert in resolving the practical and theoretical challenges of large scale distributed and high-performance computing. He is a computer scientist, mathematician and hands-on software developer who builds both rigorously engineered working systems and scalable demonstrators of new computing and networking concepts. His interests center around scalability effects in large distributed systems, their operational quality, and how to manage their degradation gracefully under saturation and in adverse operational conditions. This has led to recent work with Ofcom on scalability and traffic management in national infrastructures.

Throughout his 20-year career at the University of Bristol he was involved with early developments in networking, its protocols and their implementations. During this time he collaborated with organizations such as NATS, Nuclear Electric, HSE, ST Microelectronics and CERN on issues relating to scalable performance and operational safety. He was also technical lead on several large EU Framework collaborations relating to high performance switching. Mentoring PhD candidates is a particular interest; Neil has worked with CERN students on the performance aspects of data acquisition for the ATLAS experiment, and has ongoing collaborative relationships with other institutions.
