Transient Performance: Scoping the Challenges for SDN

Neil Davies and Peter Thompson, Predictable Network Solutions Ltd.

IEEE Softwarization, July 2017

The delivered real-time performance of networks is becoming ever more important, especially as applications increasingly become interacting collections of micro-services, dependent on each other and the connectivity between them. Typical network performance measures such as speed tests or MRTG reports represent only averages over periods that are very long compared to the response times of such services. The assumption that such averages provide a reliable guide to the performance over much shorter intervals is just that: an assumption, and one that becomes increasingly questionable as new technologies such as SDN introduce rapid and frequent network reconfigurations.

In our earlier article “Performance Contracts in SDN Systems” we presented ‘network performance’ as the absence of ‘quality impairment’ (in the same way that a ‘high-performance’ analog connection is one that introduces minimal noise and distortion). We called this impairment ‘∆Q’. It has several sources, including the time for signals to travel between the source and destination and the time taken to serialize and deserialize the information. In packet-based networks, statistical multiplexing is an additional source of impairment, making ∆Q an inherently statistical measure. It can be thought of as either the probability distribution of what might happen to a packet transmitted at a particular moment from source A to destination B or as the statistical properties of a stream of such packets. ∆Q captures both the effects of the network’s structure and extent, and the additional impairment due to statistical multiplexing.

As discussed in the previous article, whether an application delivers fit-for-purpose outcomes depends entirely on the ‘magnitude’ of ∆Q and the application’s sensitivity to it. The outcome of statistical multiplexing is affected by several factors, of which load is one; since capacity is finite, no network can offer bounded impairment to an unbounded applied load. What an application really requires from the network is the transfer of an amount of information with a bounded impairment, depending on what the application can tolerate. We previously presented a formal representation of such a requirement, called a ‘Quantitative Timeliness Agreement’ or ‘QTA’, which provides a way for an application and a network to ‘negotiate’ performance. In effect, the application ‘agrees’ to limit its applied load in return for a ‘promise’ from the network to transport it with suitably bounded impairment.
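To make the idea of a QTA more concrete, the sketch below checks a set of measured packet delays against a requirement. It assumes, purely for illustration, that the QTA's delay bound is written as a list of (fraction, delay bound) pairs, so that (0.95, 50.0) means "at least 95% of packets arrive within 50 ms". Lost packets count against every bound because they never arrive. The function name `meets_qta` and the sample figures are hypothetical, and the load-limiting half of the agreement is not modelled.

```python
from typing import Optional, Sequence, Tuple

def meets_qta(delays_ms: Sequence[Optional[float]],
              qta: Sequence[Tuple[float, float]]) -> bool:
    """Check measured delays against a hypothetical QTA.

    delays_ms: one entry per packet sent; None marks a lost packet.
    qta: (fraction, bound_ms) pairs; each requires that at least `fraction`
         of all packets sent arrive within `bound_ms`.
    """
    if not delays_ms:
        raise ValueError("no packets measured")
    n = len(delays_ms)
    for fraction, bound_ms in qta:
        # Lost packets (None) never arrive, so they never count as on time.
        on_time = sum(1 for d in delays_ms if d is not None and d <= bound_ms)
        if on_time / n < fraction:
            return False
    return True

# Hypothetical measurement: ten packets, one lost.
samples = [12.0, 15.0, 18.0, 22.0, 45.0, None, 14.0, 16.0, 13.0, 19.0]
print(meets_qta(samples, [(0.5, 20.0), (0.8, 30.0)]))  # True
print(meets_qta(samples, [(0.95, 50.0)]))              # False
```

Expressing the requirement as a bound on the whole delay distribution, rather than on an average, matches the article's point that averages are a poor guide to short-timescale behaviour.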

As SDN paths are composed of routes built upon hop-by-hop connections, each of which will introduce some ‘quality impairment’ (∆Q), truly effective orchestration requires a comprehensive understanding of how quality impairment accrues along such paths. This is a prerequisite to being able to budget and plan to deliver appropriately bounded quality impairment, which underpins fit-for-purpose end-to-end network transport.
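One standard way to model how ∆Q accrues along a path, assumed here for illustration, is to treat each hop's delay as an independent random variable. The end-to-end delay distribution is then the convolution of the per-hop distributions, and the end-to-end delivery probability is the product of the per-hop ones. The sketch below represents each hop as a discrete delay distribution over equal-width bins whose total mass may be less than 1, the shortfall being that hop's loss. The independence assumption and the helper names are ours, not the authors'.

```python
from functools import reduce
from typing import List

def convolve(a: List[float], b: List[float]) -> List[float]:
    """Distribution of the sum of two independent discretised delays."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, pa in enumerate(a):
        for j, pb in enumerate(b):
            out[i + j] += pa * pb
    return out

def compose_path(*hops: List[float]) -> List[float]:
    """End-to-end delay distribution, starting from 'zero delay, no loss'."""
    return reduce(convolve, hops, [1.0])

# Bin width 1 ms; index k means a delay of k ms.
hop1 = [0.0, 0.9, 0.1]   # 1 ms (90%) or 2 ms (10%), no loss
hop2 = [0.0, 0.0, 0.99]  # 2 ms, 1% loss
e2e = compose_path(hop1, hop2)
print([round(p, 3) for p in e2e])  # [0.0, 0.0, 0.0, 0.891, 0.099]
print(round(1 - sum(e2e), 3))      # end-to-end loss: 0.01
```

Because delays are non-negative, each extra hop can only add delay and loss, which is why the impairment budget has to be planned across the whole path rather than hop by hop.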

Measurement and Decomposition of ∆Q

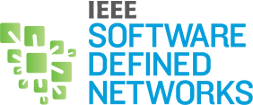

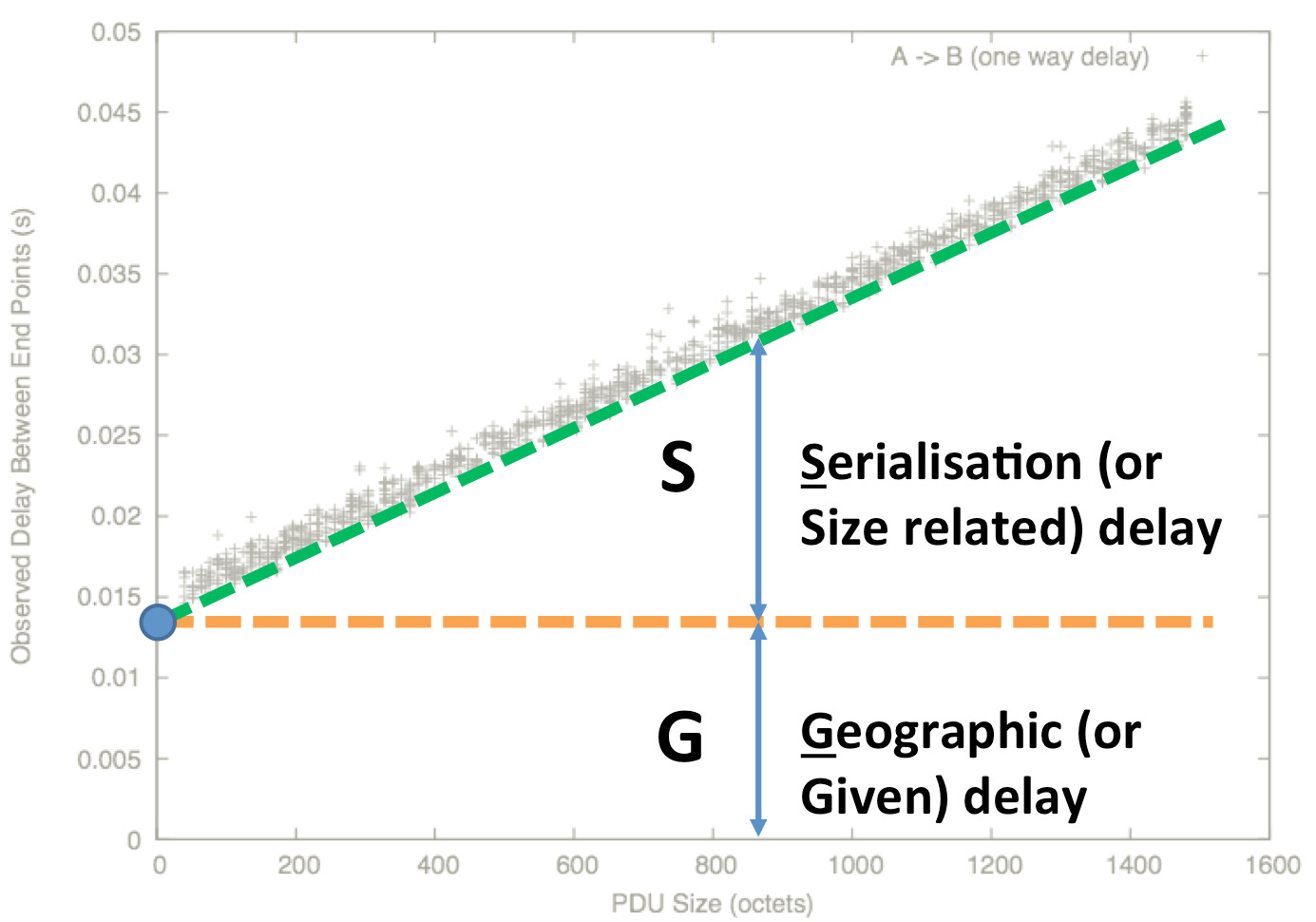

∆Q can be estimated by measuring the point-to-point delays of a sequence of packets. A typical experimental measurement of ∆Q looks like the figure above: a series of packets is sent from A to B, and the time taken for each one is measured; different packets experience different delays, but it’s unclear what sense to make of this data.
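A minimal sketch of turning such a measurement into an empirical ∆Q is shown below. It assumes that the sender and receiver clocks are synchronised and that each packet's send and receive timestamps (in ms) are matched by index, with `None` for a packet that never arrived. Because lost packets never arrive, the resulting cumulative distribution levels off below 1. The function name and the figures are hypothetical.

```python
import bisect
from typing import Callable, Optional, Sequence, Tuple

def empirical_delta_q(sent_ms: Sequence[float],
                      received_ms: Sequence[Optional[float]]
                      ) -> Tuple[Callable[[float], float], float]:
    """Return (cdf, loss) for a one-way measurement from A to B.

    cdf(t) is the fraction of all packets sent that arrived within t ms.
    """
    if len(sent_ms) != len(received_ms) or not sent_ms:
        raise ValueError("need matching, non-empty timestamp lists")
    delays = sorted(r - s for s, r in zip(sent_ms, received_ms) if r is not None)
    n = len(sent_ms)

    def cdf(t: float) -> float:
        # Count delays <= t; dividing by all packets sent makes loss show up
        # as the gap between the CDF's plateau and 1.
        return bisect.bisect_right(delays, t) / n

    return cdf, 1 - len(delays) / n

cdf, loss = empirical_delta_q([0.0, 100.0, 200.0, 300.0],
                              [20.0, 125.0, None, 330.0])
print(cdf(21.0), cdf(1000.0), loss)  # 0.25 0.75 0.25
```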

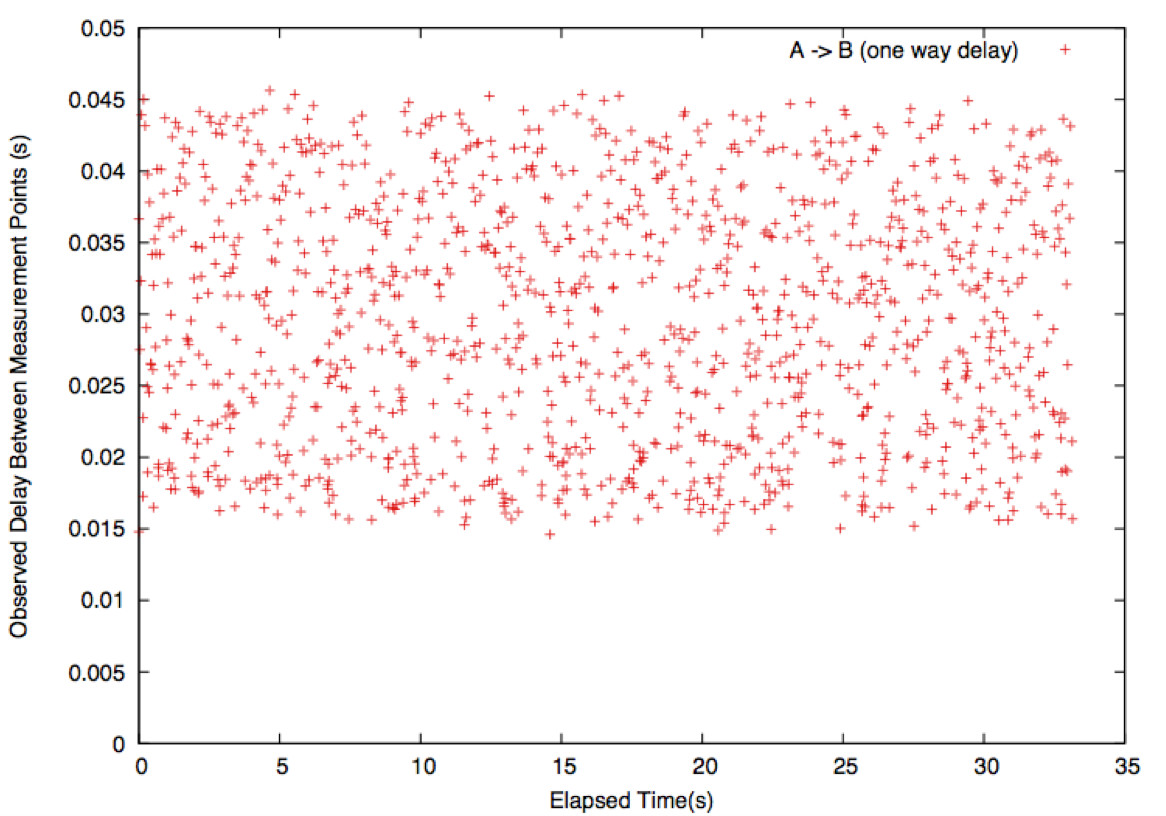

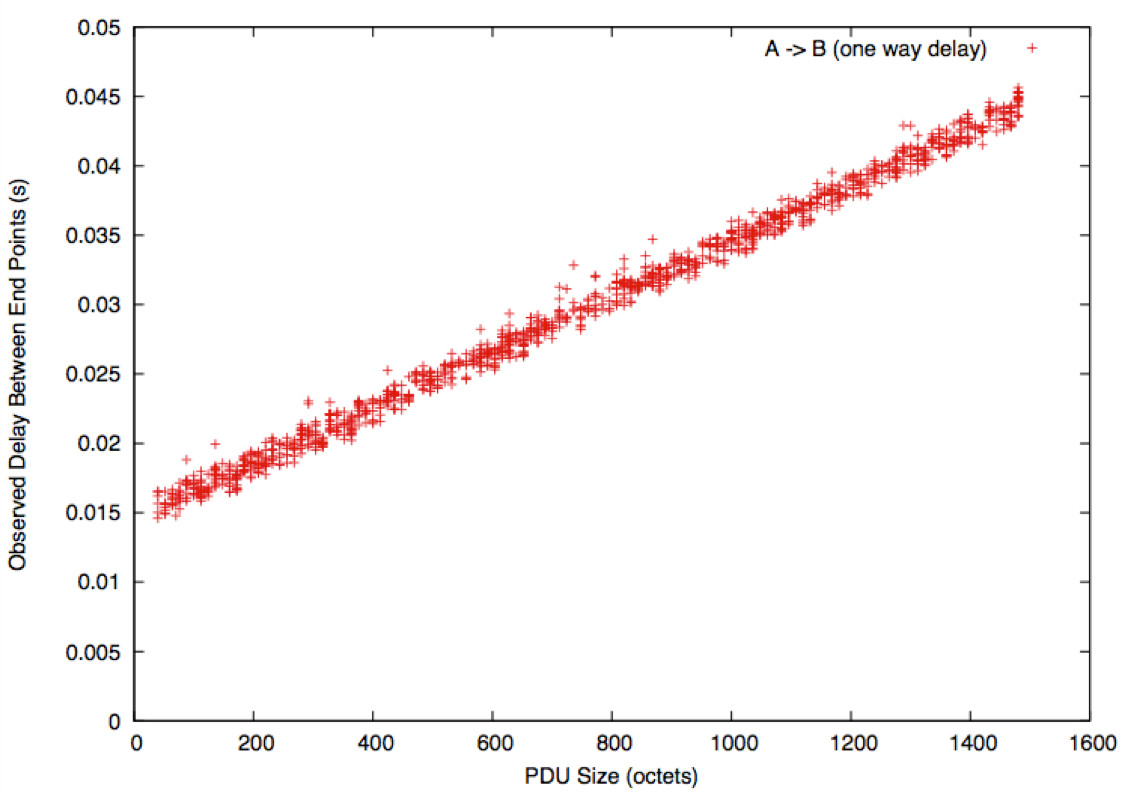

One reason for the variability is that the packets are of different sizes. The figure above shows the same data sorted by packet size; structure now begins to emerge.

There is a boundary line. Packets on the line experienced a network where all buffers were empty; those above had to wait for other traffic in buffers.

Extrapolating this line to a ‘zero size’ packet gives a measure of the minimum delay: this is called ∆Q|G, or just ‘G’. This incorporates:

- Geographical factors (~ distance/c);

- Media access effects, particularly when resources are shared (such as with WiFi and 3G);

- Per-packet overheads such as router lookups.

It represents the irreducible cost of transporting an SDU (service data unit). Note that this may, in itself, be a probability distribution (and usually is).

Packets with bigger payloads experience more structural delay: it takes longer to turn the packet into a bitstream, and back again into a packet at the next network element. This is called ∆Q|S, or just ‘S’, and is a function from packet size to delay[1].

The remainder of the delay is not structural, but is induced by offering a non-zero load to the shared transmission supply. This remaining delay is called ∆Q|V, or just ‘V’; network elements have choices over how to allocate this delay.

Each of those components could also contribute to loss.
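The G/S/V decomposition can be sketched in code. The article identifies the boundary line by inspection; this sketch instead assumes that the minimum delay observed at each packet size lies on that boundary, fits a straight line through those minima by ordinary least squares, and reads G from the intercept and S from the slope. V is then whatever each packet experienced above the line. It treats G as a single number and S as linear, both simplifications the article itself flags, and with noisy minima a least-squares line can leave a few slightly negative V values where a lower-envelope fit would not. The function name and sample data are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

def decompose(samples: Sequence[Tuple[int, float]]) -> Tuple[float, float, List[float]]:
    """Split (size_bytes, delay_ms) samples into G (ms), S (ms/byte) and per-packet V (ms)."""
    minima: Dict[int, float] = {}
    for size, delay in samples:
        minima[size] = min(delay, minima.get(size, delay))
    if len(minima) < 2:
        raise ValueError("need at least two distinct packet sizes")
    xs = list(minima)
    ys = [minima[x] for x in xs]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Ordinary least-squares line through the per-size minimum delays.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s = sxy / sxx
    g = mean_y - s * mean_x
    # V: delay above the empty-buffer line, i.e. time spent waiting in buffers.
    v = [delay - (g + s * size) for size, delay in samples]
    return g, s, v

samples = [(100, 11.0), (500, 15.0), (500, 18.0), (1000, 20.0), (1000, 25.0)]
g, s, v = decompose(samples)
print(round(g, 3), round(s, 4))        # 10.0 0.01
print(round(v[2], 3), round(v[4], 3))  # 3.0 5.0
```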

All ∆Q is (everywhere and always) composed of these three basic elements. G, S, and V form a set of ‘basis vectors’ for the overall ∆Q. It is important to remember that it is this overall ∆Q that determines application outcomes; but for understanding how the network gives rise to ∆Q, the decomposition into G, S and V is very helpful.

Variability of Network Performance

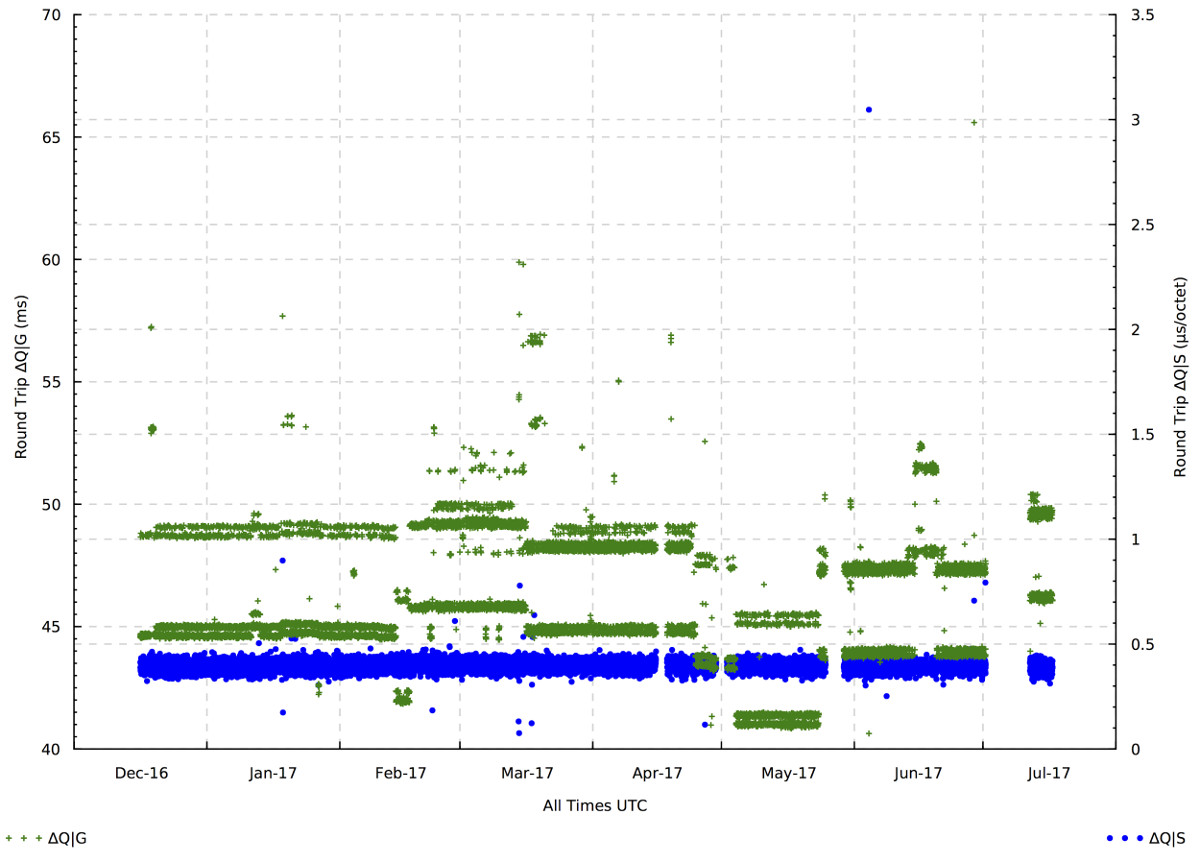

Repeated measurements of ∆Q over the same path show striking patterns of variability.

The diagram above shows the G and S components of the measured ∆Q for the round-trip between an endpoint connected to a GPON access network and a server in a datacenter 1600km away. Measurements were taken three times per hour; S is mostly constant, but G shows interesting variations. The absolute minimum round-trip time determined by the speed of light is 11ms; however, light travels more slowly in glass than in space; fibers do not follow the shortest possible path between two arbitrarily chosen points; and packets must pass through intermediate switches and routers, so a minimum measured G of 42ms is entirely reasonable. What is notable is how G varies between one measurement and the next, typically by up to 10ms, presumably due to routing choices.
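The 11 ms figure follows from simple arithmetic, sketched below. The refractive index used for the fibre case (about 1.47, so light travels at roughly two-thirds of c) is a typical value assumed for illustration, not a figure from the measurement, and the path is taken as the straight-line distance, which real fibre routes exceed.

```python
C_KM_PER_MS = 299_792.458 / 1000  # speed of light in vacuum, km per ms

def min_rtt_ms(distance_km: float, refractive_index: float = 1.0) -> float:
    """Round-trip propagation delay over a straight path of the given length."""
    return 2 * distance_km * refractive_index / C_KM_PER_MS

print(round(min_rtt_ms(1600), 1))        # 10.7 ms in vacuum
print(round(min_rtt_ms(1600, 1.47), 1))  # 15.7 ms in typical silica fibre
```

Even the fibre figure accounts for well under half of the measured minimum G of 42ms; the remainder comes from route length and per-hop processing, as noted above.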

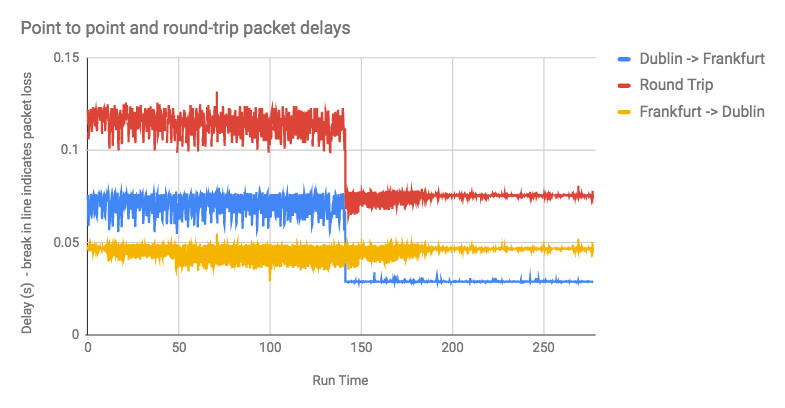

When such choices are made on a connection-by-connection basis they are unlikely to be problematic. However, the diagram above shows a sudden shift in delay during the course of a single measurement.

Each point shows the delay for an individual packet between data centers in Dublin and Frankfurt (approximately 1000km apart, a 6ms round-trip as the photon flies). It can be seen that the base delay (∆Q|G) suddenly drops by about 40ms, again presumably because of a routing change, which could be problematic for many applications. Ongoing measurement of this route shows that changes like this (though not often of this magnitude) occur several times per week.
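One simple way to spot such transients automatically, sketched here as an illustration rather than as the authors' method, is to track the minimum delay over successive non-overlapping windows of packets. Taking the minimum filters out most of V (time spent in buffers), so a large jump between consecutive window minima suggests that G itself has changed. The window size, threshold and function name are hypothetical tuning choices.

```python
from typing import List, Sequence, Tuple

def base_delay_shifts(delays_ms: Sequence[float], window: int,
                      threshold_ms: float) -> List[Tuple[int, float, float]]:
    """Return (window_start, old_min, new_min) wherever the windowed minimum
    delay moves by more than threshold_ms between consecutive windows."""
    if window <= 0:
        raise ValueError("window must be positive")
    shifts = []
    prev = None
    for start in range(0, len(delays_ms) - window + 1, window):
        cur = min(delays_ms[start:start + window])
        if prev is not None and abs(cur - prev) > threshold_ms:
            shifts.append((start, prev, cur))
        prev = cur
    return shifts

delays = [70.0, 75.0, 72.0, 90.0, 71.0, 73.0, 30.0, 35.0, 31.0, 33.0, 40.0, 32.0]
print(base_delay_shifts(delays, 3, 20.0))  # [(6, 71.0, 30.0)]
```

The shift is located only to window granularity, and windows that are too small risk letting a burst of queueing masquerade as a change in G.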

Challenge for SDN

Softwarization of networks makes changes of configuration, for example bearer and routing options, much easier to perform, and hence will probably increase their frequency substantially. It is tempting to assume that, provided connectivity is maintained and capacity is sufficient, such changes can be made freely and without consequences. The examples above show that, even in the current, largely static situation, there are substantial variations and sharp transients in performance that could have significant impacts on real-time and highly interactive services.

The challenge for the SDN ecosystem is to better understand and model these dynamics of network performance. Given that individual route choices can cause application-affecting changes in delivered quality impairment, issues of both consistency and absolute value arise, particularly as an application may open up multiple connections to a single peer. As we have shown above, quality impairment can change hour-by-hour or even second-by-second in existing (non-SDN) solutions. How can SDN rise to the challenge of delivering better and more consistent services than we have today, with better control over the delivered quality impairment and hence more satisfactory application and customer experience?

[1] ∆Q|S is not necessarily a straight line: it may have a more complex structure depending on media quantisation (e.g. ATM cells, WiFi) and bearer allocation choices (e.g. 3GPP).
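As an illustration of the staircase shape this footnote describes, the sketch below computes the serialisation component of S for packets carried over ATM using AAL5, where the payload plus an 8-byte trailer is padded out to a whole number of 48-byte cell payloads, each sent as a 53-byte cell. It ignores any further encapsulation headers a real access link would add, and the 1 Mbit/s line rate in the example is an arbitrary assumption.

```python
import math

ATM_CELL_BYTES = 53
ATM_CELL_PAYLOAD_BYTES = 48
AAL5_TRAILER_BYTES = 8

def atm_serialisation_ms(sdu_bytes: int, line_rate_bps: float) -> float:
    """Time to put one SDU onto an ATM link, in ms (AAL5 framing only)."""
    cells = math.ceil((sdu_bytes + AAL5_TRAILER_BYTES) / ATM_CELL_PAYLOAD_BYTES)
    return cells * ATM_CELL_BYTES * 8 / line_rate_bps * 1000

for size in (40, 41, 88, 89):
    print(size, round(atm_serialisation_ms(size, 1_000_000), 3))
# 40 0.424
# 41 0.848
# 88 0.848
# 89 1.272
```

A one-byte increase in packet size can therefore add a whole cell's worth of delay, a step that a straight-line fit of S would smear out.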

Neil Davies is an expert in resolving the practical and theoretical challenges of large scale distributed and high-performance computing. He is a computer scientist, mathematician and hands-on software developer who builds both rigorously engineered working systems and scalable demonstrators of new computing and networking concepts. His interests center around scalability effects in large distributed systems, their operational quality, and how to manage their degradation gracefully under saturation and in adverse operational conditions. This has led to recent work with Ofcom on scalability and traffic management in national infrastructures.

Throughout his 20-year career at the University of Bristol he was involved with early developments in networking, its protocols and their implementations. During this time he collaborated with organizations such as NATS, Nuclear Electric, HSE, ST Microelectronics and CERN on issues relating to scalable performance and operational safety. He was also technical lead on several large EU Framework collaborations relating to high performance switching. Mentoring PhD candidates is a particular interest; Neil has worked with CERN students on the performance aspects of data acquisition for the ATLAS experiment, and has ongoing collaborative relationships with other institutions.

Peter Thompson became Chief Technical Officer of Predictable Network Solutions in 2012 after several years as Chief Scientist of GoS Networks (formerly U4EA Technologies). Prior to that he was CEO and one of the founders (together with Neil Davies) of Degree2 Innovations, a company established to commercialize advanced research into network QoS/QoE, undertaken during the four years he spent as a Senior Research Fellow at the Partnership in Advanced Computing Technology in Bristol, England. Previously he spent eleven years at STMicroelectronics (formerly INMOS), where one of his numerous patents for parallel computing and communications received a corporate World-wide Technical Achievement Award. For five years he was the Subject Editor for VLSI and Architectures of the journal Microprocessors and Microsystems, published by Elsevier. He has degrees in mathematics and physics from the Universities of Warwick and Cambridge, and spent five years doing research in general relativity and quantum theory at the University of Oxford.

Editor:

Laurent Ciavaglia is currently senior research manager at Nokia Bell Labs where he coordinates a team specialized in autonomic and distributed systems management, inventing future network management solutions based on artificial intelligence.

In recent years, Laurent led the European research project UNIVERSELF (www.univerself-project.eu), developing a unified management framework for autonomic network functions. He has also worked on the design, specification and evaluation of carrier-grade networks, including several European research projects dealing with network control and management.

As part of his activities in standardization, Laurent participates in several working groups of the IETF OPS area, is co-chair of the Network Management Research Group (NMRG) of the IRTF and is a member of the Internet Research Steering Group (IRSG). Previously, Laurent was also vice-chair of the ETSI Industry Specification Group on Autonomics for Future Internet (AFI), working on the definition of standards for self-managing networks.

Laurent has co-authored more than 80 publications and holds 35 patents in the field of communication systems. Laurent also acts as a member of the technical committees of several IEEE, ACM and IFIP conferences and workshops, and as a reviewer for refereed international journals and magazines.

Towards the World Brain with SDN & NFV

Chris Hrivnak, The Nemacolin Group

IEEE Softwarization, July 2017

In early January 2017, in Las Vegas at their World Developer Summit, AT&T executive John Stankey announced AT&T is no longer "the phone company," but rather "the open-source software-defined networks company." What a change!

Stankey went on to say the company has enabled 34% of its networks with software capabilities and will continue the trend. In order to more fully transition its legacy networks, AT&T is acquiring Vyatta (network operating systems with VNFs such as vRouter, a distributed services platform and new unreleased software) from Brocade this year. Last year, AT&T introduced ECOMP (Enhanced Control, Orchestration, Management & Policy).

In collaboration with Intel Labs, Sprint too is shifting from proprietary equipment and software control to open-source software and off-the-shelf data center hardware with C3PO (Clean CUPS Core Packet Optimization, where CUPS itself stands for Control & User Plane Separation: an acronym within an acronym).

Just a few years ago, in October 2012, ETSI (European Telecommunications Standards Institute) published an introductory white paper from operators on Network Functions Virtualization (NFV), which is complementary to SDN. ETSI also hosts Open Source MANO (OSM), a software framework for NFV Management and Orchestration (MANO).

This year on the Gartner Hype Cycle, SDN and NFV can be found on the slope of enlightenment and the plateau of productivity.

Grand forecasts of world market growth and size have been released, for instance:

"Between 2015 and 2020, the service provider NFV market will grow at a robust compound annual growth rate (CAGR) of 42 percent --- from $2.7 billion in 2015 to $15.5 billion in 2020," said Michael Howard, Senior Research Director, Carrier Networks, at IHS Markit.

That market would be for NFV hardware, software and services.
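The quoted growth rate is consistent with the two market sizes. A quick check with the standard compound annual growth rate formula, (end / start) ** (1 / years) - 1, over the five years from 2015 to 2020:

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

print(f"{cagr(2.7, 15.5, 5):.1%}")  # 41.8%
```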

There are many SDN/NFV tech conferences under way, with increasing concerns about fitting the software to business models, total cost of ownership (TCO) analyses and ramping up for the IoT, LTE-M and eventually 5G networks, which are likely to be user-centric with on-demand dynamic delivery for service-oriented architectures.

Google will continue to rely upon SDN and NFV in building its networking infrastructure to "NexGen" level to accommodate mobile devices in 5G. Its platform is described, in part, as being based on an SDN framework enabling networks to adapt to new services and traffic patterns. Characteristics include fast user-space packet processing on commodity hardware, simplified workflow management and automated testing.

Network maturity models or maps are being promulgated by Gartner, TMForum and others. Intel envisages six phases of maturity, starting from the present focus on standalone functions and common information models and progressing towards network slicing, federation and full service automation.

The rise of analytics and machine learning over cloud from Azure, Google, IBM, et al., via CPUs, Nvidia GPUs and Tensor Processing Units (TPUs) can be expected to lead to the implementation of intelligent orchestration of network functions.

There will be driving benefits in terms of network security, telemetric processing, server infrastructures and other areas in the near- to mid-term. Productivity improvements such as double digit cost reductions in customer premise equipment (CPE) and service commissioning as well as new profitable revenue streams are expected as the market size and growth forecasts manifest.

Data moving through the cloud, fog and mobile edge between devices at increasing throughputs and decreasing latencies require network architectures structured with SDN and NFV, such as has already been established with Open Platform NFV (OPNFV) since 2014, so that agility, flexibility, interoperability and scalability are robust. In terms of interoperability, ETSI has come up with the Family of Common NFV APIs (Application Programming Interfaces) to enable a "modular and extensible framework for automated management and orchestration of VNFs and network service (NS) within an Interoperable Ecosystem (Source: Layer123)."

Persistent security concerns remain with NFV. ETSI has organized an NFV Security Working Group, which publishes releases from time to time. The conundrum is providing robust security while simultaneously supporting lawful interception. The group held a tutorial on the subject last month in the south of France.

These are game-changing times for network operators and providers, whether they are innovators, early adopters or laggards. Adding in future concerns about augmented and virtual realities, enterprise data visualization and supercomputing, or even quantum computing, over cloud, fog and edge leads further into the "world brain" concepts of Wells, Clark, Gaines, Goertzel, de Rosnay and Heylighen. For instance, the aggregation of image data from labs and other sources around the world will probably revolutionize scientific and engineering research and development.

Let's hope that geomagnetically induced currents (GICs) from solar storms, such as coronal mass ejections, are limited in their effects on electrical grids!

Chris Hrivnak is a Sr. Member of the IEEE and The Photonics Society. He is also a member of the IEEE Life Sciences Community, the IEEE Software Defined Networks (SDN) Community and the IEEE Internet Technology Policy Community and has participated in the IEEE Experts in Technology and Policy (ETAP) Forum. He graduated from Baldwin Wallace University and completed varied coursework at Cleveland State, Case Western Reserve, Gould Management Education Center, McKinsey, Hughes R&D Productivity, UT-Dallas, Tulane Law and Pepperdine Graziadio. He has a broad range of interests, including but not limited to augmented intelligence, autonomous systems, additive manufacturing, information & communications technologies (ICT), life sciences and dark physics.

Editor:

Assistant Prof. Dr. Korhan Cengiz is a senior lecturer in the department of electrical-electronics engineering in Trakya University, Turkey. His research interests include wireless sensor networks, SDN, 5G and spatial modulation.

IEEE Softwarization - July 2017

A collection of short technical articles

Overview of NetSoft 2017 Best Paper: “Catena: A Distributed Architecture for Robust Service Function Chain Instantiation with Guarantees”

By Flavio Esposito, Saint Louis University, USA

Overview of NetSoft 2017 Paper: “X–MANO: Cross–domain Management and Orchestration of Network Services”

By Antonio Francescon, FBK CREATE-NET, Italy; Giovanni Baggio, FBK CREATE-NET, Italy; Riccardo Fedrizzi, FBK CREATE-NET, Italy; Ramon Ferrus, UPC, Spain; Imen Grida Ben Yahia, Orange Labs, France; and Roberto Riggio, FBK CREATE-NET, Italy

Transient Performance: Scoping the Challenges for SDN

By Neil Davies and Peter Thompson, Predictable Network Solutions Ltd.

Towards the World Brain with SDN & NFV

By Chris Hrivnak, The Nemacolin Group

Overview of IEEE NetSoft Conference 2017 Best Paper: “Catena: A Distributed Architecture for Robust Service Function Chain Instantiation with Guarantees”

Flavio Esposito, Saint Louis University, USA

IEEE Softwarization, July 2017

To offer wide-area distributed virtual network services, resources from multiple end-users and (federated or geo-distributed) providers need to be managed. Such virtual resource management is, however, very complex, given the scale and constraints required by various applications. Moreover, multiple virtual network functions need to coexist in support of service function chains. For example, in an edge computing scenario supporting first responders in case of a natural or man-made disaster, or to support remote data-intensive edge processing for medical applications, service functions involving edge clouds (i.e., edge functions) are needed. Edge functions may range from management plane mechanisms, such as load balancing, firewalls, data acceleration, intrusion detection, network address translators or deep-packet inspections, to data plane mechanisms, such as congestion control and network scheduling. Orchestrating such edge functions in a multi-tenant scenario requires instantiation and programmability of several network policies (not merely forwarding), to adapt to the dynamic nature of the service. By policy, we mean a variant aspect of any network mechanism, e.g., a desirable high-level goal dictated by users, applications, (5G or edge) infrastructure or service providers. Many systems for distributed service function chain orchestration are actively being developed (see, e.g., [1]). However, the ability to guarantee convergence and performance of multiple (virtualized) instances of a service function chain in a fully distributed fashion is still missing.

To this end, we designed and implemented Catena (“chain” in Italian), a system that guarantees quick, resilient and fully distributed orchestration of a service (edge) function, i.e., virtual path discovery, service chain mapping and resilient chain instantiation. Our Catena architecture solves the distributed chain mapping problem with an asynchronous consensus protocol, even in the presence of (non-Byzantine) failures of processes or physical links.

Solutions that provide resilient decentralized asynchronous consensus already exist; see, e.g., the Paxos consensus algorithm [2] (a version of which is used even within Google data centers [3]) or the more recent Raft [4], adopted by the Open Network Operating System (ONOS) [1]. The design behind these protocols is sound, and although these approaches have been subject to recent optimizations and improvements (see, e.g., [5]), none of them simultaneously provides: (i) guarantees on the Pareto optimality of the elected set of leaders, (ii) bounds on the agreement convergence time, and (iii) a fully distributed approach (as opposed to a decentralized one as in [4]). Our Catena architecture bridges this gap with the following contributions:

Architectural contributions: leveraging stochastic optimization theory, we identified the mechanisms and the interactions composing what we called the resilient service function chain instantiation problem. The three mechanisms that are necessary and sufficient to instantiate a chain are: (i) state retrieval, (ii) chain mapping, and (iii) resource binding.

Algorithmic contributions: to efficiently and effectively solve the service chain mapping problem, one of the three mechanisms of the resilient service function chain instantiation problem, we propose a fully Distributed Asynchronous Chain Consensus Algorithm (DACCA) that is guaranteed to converge in the least possible number of messages (showing an overhead improvement of up to 32% with respect to Raft [4] consensus) and has probabilistic guarantees on the expected chain instantiation performance, with respect to a Pareto optimal network utility. In a nutshell, DACCA allows edge and core cloud providers to cooperatively instantiate wide-area service function chains with guaranteed convergence and performance, even in the presence of failures that do not partition the physical network.
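For readers less familiar with the term, the sketch below illustrates Pareto optimality on its own: given candidate hosts scored on several utilities (higher is better), it keeps those that no other candidate beats in every dimension. It is a generic illustration of Pareto dominance only, not DACCA; the host names and the two utility dimensions (for example spare capacity and proximity) are hypothetical.

```python
from typing import Dict, Tuple

Utilities = Tuple[float, ...]

def dominates(a: Utilities, b: Utilities) -> bool:
    """a dominates b if it is at least as good everywhere and better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(candidates: Dict[str, Utilities]) -> Dict[str, Utilities]:
    """Keep only the candidates that no other candidate dominates."""
    return {
        name: u
        for name, u in candidates.items()
        if not any(dominates(other, u)
                   for other_name, other in candidates.items() if other_name != name)
    }

hosts = {"edge-a": (0.9, 0.2), "edge-b": (0.5, 0.5),
         "core-c": (0.4, 0.4), "core-d": (0.1, 0.9)}
print(sorted(pareto_front(hosts)))  # ['core-d', 'edge-a', 'edge-b']
```

Any candidate outside the front can be replaced by one inside it without making any utility worse, which is what gives a Pareto guarantee on the elected leaders its meaning.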

System contributions: we evaluated DACCA by simulating a few representative policies and analyzing their tradeoffs, and we confirmed our results over a (RINA-based) prototype implementation [6], whose link is available in the main paper [7]. Our evaluation of Catena showed a few surprising results, among them a lower overhead than the Raft [4] consensus protocol, and a higher acceptance rate resulting from sequential sub-chain requests, i.e., each hosting process should attempt to map a single chain node at a time. Our released Catena prototype can be used by researchers interested in 5G and edge cloud provider mechanism design to analyze tradeoffs between chain instantiation policies.

References

[1] P. Berde, M. Gerola, J. Hart, Y. Higuchi, M. Kobayashi, T. Koide, B. Lantz, B. O’Connor, P. Radoslavov, W. Snow, and G. Parulkar, “ONOS: Towards an open, distributed SDN OS”, in Proceedings of the Third Workshop on Hot Topics in Software Defined Networking, ser. HotSDN’14. New York, NY, USA: ACM, 2014, pp. 1–6. [Online]. Available: http://doi.acm.org/10.1145/2620728.2620744

[2] L. Lamport, “The part-time parliament”, ACM Trans. Comput. Syst., vol. 16, no. 2, pp. 133–169, May 1998. [Online]. Available: http://doi.acm.org/10.1145/279227.279229

[3] T. D. Chandra, R. Griesemer, and J. Redstone, “Paxos made live: An engineering perspective”, in Proceedings of the Twenty-sixth Annual ACM Symposium on Principles of Distributed Computing, ser. PODC ’07, 2007, pp. 398–407.

[4] D. Ongaro and J. Ousterhout, “In search of an understandable consensus algorithm”, in 2014 USENIX Annual Technical Conference (USENIX ATC 14), Jun. 2014, pp. 305–319.

[5] L. Lamport, “Fast Paxos”, Distributed Computing, vol. 19, no. 2, pp. 79–103, 2006. [Online]. Available: http://dx.doi.org/10.1007/s00446-006-0005-x

[6] F. Esposito, Y. Wang, I. Matta, and J. Day, “Dynamic Layer Instantiation as a Service”, in Proc. of 10th USENIX Symp. on Networked Systems Design and Implementation (NSDI 2013), Lombard, IL, April 2013, p. 98.

[7] F. Esposito, “Catena: A Distributed Architecture for Robust Service Function Chain Instantiation with Guarantees”, in Proc. of IEEE 3rd Conf. on Network Softwarization (NetSoft 2017), Bologna, Italy, July 2017.

* Best Paper at NetSoft 2017

Flavio Esposito is an Assistant Professor of Computer Science at Saint Louis University, in Missouri, USA, and a visiting professor by courtesy at the University of Missouri, Columbia. He received his PhD in Computer Science from Boston University, USA, in 2013, and his Master of Science in Telecommunication Engineering from the University of Florence, Italy.

Before joining Saint Louis University, Flavio worked on data and network management problems as a research scientist and software engineer at Exegy, a high-frequency trading company in Saint Louis, MO, at Raytheon BBN Technologies, in Cambridge, MA, and at Bell Laboratories, NJ. Flavio has also worked as a visiting researcher at Eurecom, France, and in two research centers affiliated with the University of Oulu, Finland: the Centre for Wireless Communications and MediaTeam. Flavio is currently managing an NSF award and is a recipient of a best paper award at IEEE NetSoft 2017.

Overview of IEEE NetSoft Conference 2017 Paper: “X–MANO: Cross–domain Management and Orchestration of Network Services”

Antonio Francescon, FBK CREATE-NET, Italy; Giovanni Baggio, FBK CREATE-NET, Italy; Riccardo Fedrizzi, FBK CREATE-NET, Italy; Ramon Ferrus, UPC, Spain; Imen Grida Ben Yahia, Orange Labs, France; and Roberto Riggio, FBK CREATE-NET, Italy

IEEE Softwarization, July 2017

The orchestration of network services is a well-investigated problem. Standards and recommendations have been produced by ETSI and IETF, while a significant body of scientific literature explores both the theoretical and practical aspects of the problem. Likewise, several open–source as well as proprietary tools for network service orchestration are already available. Nevertheless, in most of these cases network services can only be provisioned within a single administrative domain, effectively preventing end–to–end network service delivery across multiple Infrastructure Providers (InPs).

One of the main differences between single–domain and multi–domain network service orchestration is how aware the involved Domain Orchestrators/Managers (DOMs) are of the whole process. In the single–domain scenario the DOM has the whole situation under its control. Conversely, in the multi–domain scenario such a global view is missing, since the different DOMs are usually not designed to interact with each other or to share information about the network service deployment process.

Composing resources from different InPs under a single umbrella framework, without imposing requirements or restrictions on the individual InPs, is one of the biggest challenges of multi–domain network service orchestration. In particular, each InP shall be allowed to orchestrate its part of the network service according to its own internal administrative policies, without having to disclose confidential information, such as traffic matrices and internal topology, to the other InPs involved in the service. As a result, existing NFV Management and Orchestration frameworks that assume global network knowledge [1], [2] are not applicable.
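To make the confidentiality requirement concrete, the following is a minimal sketch, assuming a hypothetical split between a private per-domain view and a public advertisement. The class names `InternalDomainView` and `DomainAdvertisement`, the functions `advertise` and `candidate_domains`, and all field names are illustrative assumptions, not the X–MANO information model. The point it shows is that internal topology and traffic matrices never leave the domain; only a VNF catalog, aggregate capacity, and opaque peering points are exported.

```python
from dataclasses import dataclass


@dataclass
class InternalDomainView:
    """Private state of one InP (hypothetical structure, never exported)."""
    name: str
    links: dict[tuple[str, str], float]           # internal topology: (node, node) -> capacity, Gbit/s
    traffic_matrix: dict[tuple[str, str], float]  # current demand between internal nodes, Gbit/s
    node_vcpus: dict[str, int]                    # free vCPUs per compute node
    vnf_catalog: set[str]                         # VNF types the domain can instantiate
    border_points: set[str]                       # opaque IDs of peering points to other domains


@dataclass(frozen=True)
class DomainAdvertisement:
    """Abstract view an InP publishes to the federation (hypothetical fields)."""
    name: str
    vnf_types: frozenset[str]
    free_vcpus: int
    border_points: frozenset[str]


def advertise(view: InternalDomainView) -> DomainAdvertisement:
    """Project the private view onto the public advertisement."""
    return DomainAdvertisement(
        name=view.name,
        vnf_types=frozenset(view.vnf_catalog),
        # Aggregate capacity hides how compute is spread across internal nodes.
        free_vcpus=sum(view.node_vcpus.values()),
        # links and traffic_matrix are deliberately not copied.
        border_points=frozenset(view.border_points),
    )


def candidate_domains(ads: list[DomainAdvertisement], vnf_type: str, vcpus: int) -> list[str]:
    """Domains whose advertisement claims they can host vnf_type with enough vCPUs."""
    return [ad.name for ad in ads if vnf_type in ad.vnf_types and ad.free_vcpus >= vcpus]


# Example: two domains advertise; a federation peer only ever sees `ads`.
inp_a = InternalDomainView(
    name="InP-A",
    links={("r1", "r2"): 10.0, ("r2", "r3"): 40.0},
    traffic_matrix={("r1", "r3"): 3.5},
    node_vcpus={"dc1": 32, "dc2": 16},
    vnf_catalog={"streamer", "firewall"},
    border_points={"gw-A1"},
)
inp_b = InternalDomainView(
    name="InP-B",
    links={("s1", "s2"): 100.0},
    traffic_matrix={},
    node_vcpus={"edge1": 8},
    vnf_catalog={"transcoder"},
    border_points={"gw-B1"},
)
ads = [advertise(inp_a), advertise(inp_b)]
print(ads[0].free_vcpus)                        # 48
print(candidate_domains(ads, "transcoder", 4))  # ['InP-B']
```

Aggregating capacity is one possible abstraction; a real federation would likely need richer (but still non-revealing) descriptions, such as per-border-point bandwidth, to stitch services together.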

In this paper we take a step towards enabling cross–domain network service orchestration by introducing the X–MANO framework. X–MANO consists of a confidentiality–preserving interface for inter–domain federation and a set of abstractions (backed by a consistent information model) enabling network service life–cycle programmability. These abstractions tackle all aspects of cross–domain network service provisioning, including on–boarding, scaling, and termination.

X-MANO has the following features:

- Flexible deployment model. Several architectures, each driven by different business requirements and use cases, can be used to enable multi–domain network service orchestration. The simplest approach is the hierarchical one [3], where different domains rely on a centralized orchestrator. From a business point of view, this is a viable solution only within a single administrative domain, as different operators are unlikely to give a third party global control of their infrastructures. Another approach is the cascading (or recursive) one, where an operator exploits the network services exposed by another operator to serve its customers (e.g. a mobile network operator using a satellite operator for back–hauling). Finally, in the peer–to–peer model a network service is provided by pooling resources across several InPs, possibly covering different geographical/technological domains. X–MANO supports all the use cases and architectural solutions described above by introducing a flexible, deployment–agnostic federation interface between heterogeneous administrative and technological domains.

- Confidentiality-preserving federation. In the single–domain case, network service orchestration is performed assuming complete knowledge of the underlying resources. While this assumption still holds for network services spanning heterogeneous technological domains that belong to the same operator, it breaks confidentiality once multiple administrative domains are involved. Similar considerations are made by the authors of [4], [5], [6]. X–MANO addresses this requirement by introducing an information model that enables each domain to advertise its capabilities, resources, and VNFs to an external entity in a confidentiality–preserving fashion. A Multi–Domain Network Service Descriptor allows network service developers to define network services without being exposed to the implementation details of the individual domains.

- Programmable network services. Irrespective of the number of administrative and/or technological domains involved, VNFs and network services have specific life–cycle management requirements. For example, a video transcoding VNF may require a streaming VNF to be configured and running before it can start operating. Similarly, an initialization script may require as input the output of other initialization scripts. As a result, when VNFs belonging to the same network service are deployed across different domains, it becomes harder to ensure consistent service on–boarding, scaling, and termination, because different orchestrators must cooperate in order to deploy and operate a single network service. X–MANO addresses this requirement by introducing the concept of a programmable network service, which relies on a domain-specific scripting language that allows network service developers to implement custom life–cycle management policies.
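The ordering constraints in the transcoding example can be illustrated with a dependency graph. The sketch below is not X–MANO's domain-specific scripting language; it swaps in plain Python and a topological sort (`graphlib.TopologicalSorter` from the standard library) to show the idea under stated assumptions. The function `onboard`, the `deps`/`placement`/`init` dictionaries, and the stream URL are hypothetical. Each VNF's init hook runs only after all of its prerequisites, regardless of which domain hosts them, and receives their outputs as input.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical init hook: receives the outputs of the VNFs it depends on,
# returns its own outputs (e.g. an endpoint URL) for downstream VNFs.
InitFn = Callable[[dict[str, dict]], dict]


def onboard(
    deps: dict[str, set[str]],
    placement: dict[str, str],
    init: dict[str, InitFn],
) -> tuple[list[tuple[str, str]], dict[str, dict]]:
    """Run each VNF's init hook after all of its prerequisites, whatever domain hosts it.

    deps maps a VNF to the VNFs that must be running before it starts.
    Returns the (vnf, domain) start order and every VNF's outputs.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    outputs: dict[str, dict] = {}
    order: list[tuple[str, str]] = []
    for vnf in TopologicalSorter(deps).static_order():
        # Only the outputs of direct prerequisites are handed to the hook.
        upstream = {d: outputs[d] for d in deps.get(vnf, set())}
        outputs[vnf] = init[vnf](upstream)
        order.append((vnf, placement[vnf]))
    return order, outputs


# The transcoder (in InP-B) needs the streamer (in InP-A) running and its URL as input.
deps = {"transcoder": {"streamer"}, "streamer": set()}
placement = {"streamer": "InP-A", "transcoder": "InP-B"}
init = {
    "streamer": lambda up: {"url": "rtsp://streamer.example/live"},
    "transcoder": lambda up: {"source": up["streamer"]["url"]},
}
order, outputs = onboard(deps, placement, init)
print(order)  # [('streamer', 'InP-A'), ('transcoder', 'InP-B')]
```

This runs every hook from a single process for simplicity; in a federated setting each step would presumably be dispatched to the orchestrator of the hosting domain, with only the declared outputs crossing domain boundaries.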

We validated the X–MANO framework by implementing it in a proof–of–concept prototype and using it to deploy a video transcoding network service in a multi–domain InP testbed. Finally, we release the proof–of–concept X–MANO implementation under the permissive Apache 2.0 license, making it available to researchers and practitioners [7].

References

[1] OpenBaton: [Online]. Available: https://openbaton.github.io/

[2] OPNFV: [Online]. Available: https://www.opnfv.org/

[3] European Telecommunications Standards Institute (ETSI), Network Functions Virtualisation (NFV); Management and Orchestration; Report on Architectural Options, Std. ETSI GS NFV-IFA 009, July 2016.

[4] M. Chowdhury, F. Samuel, and R. Boutaba, “PolyViNE: Policy-based virtual network embedding across multiple domains”, in Proc. of ACM VISA, New Delhi, India, 2010.

[5] T. Mano, T. Inoue, D. Ikarashi, K. Hamada, K. Mizutani, and O. Akashi, “Efficient virtual network optimization across multiple domains without revealing private information”, IEEE Transactions on Network and Service Management, vol. 13, no. 3, pp. 477–488, Sept 2016.

[6] C. Bernardos, L. Contreras, and I. Vaishnavi, “Multi-domain network virtualization”, Working Draft, Internet-Draft draft-bernardos-nfvrg-multidomain-01, October 2016.

[7] X-MANO: [Online]. Available: https://github.com/5g-empower/x-mano

Antonio Francescon is a Research Engineer at FBK CREATE-NET. He received his master's degree in Computer Science Engineering in 2005 from the University of Padua (Italy). Since June 2005 he has been working at CREATE-NET, designing and developing software both for embedded devices and for Optical Network Management (mainly focusing on the development of emulators for testing new Control Plane solutions). He has been involved in several European projects such as DICONET-FP7 and CHRON-FP7 (Optical Networks), and COMPOSE-FP7 and UNCAP-H2020 (IoT). His current research interest is Network Function Virtualization, in particular cross-domain Network Service orchestration (his main activity in the VITAL-H2020 EU project).

Giovanni Baggio received his master's degree in Telecommunication Engineering from the University of Trento, Italy, in 2015. He is currently a Research Engineer at FBK CREATE-NET, where he is involved in the H2020 Vital project as system designer; in the past he was also involved in the H2020 Fed4FIRE project as software developer. Prior to the degree, his experience included various university projects and work as a system administrator for a few local SMEs.

Riccardo Fedrizzi received his MS degree in Telecommunications Engineering from the University of Trento with a thesis focused on adaptive techniques for multi-user detection over time-varying multipath fading channels in MC-CDMA systems. After graduating, he worked at the Department of Information Engineering and Computer Science of Trento, with a main interest in the study and development of "software defined radio" technologies for broadband data transmission over wireless networks. He joined CREATE-NET in January 2009, working on different EU-funded projects in the wireless networks field.

Ramon Ferrús received the Telecommunications Engineering (B.S. plus M.S.) and Ph.D. degrees from the Universitat Politècnica de Catalunya (UPC), Barcelona, Spain, in 1996 and 2000, respectively. He is currently a tenured associate professor with the Department of Signal Theory and Communications at UPC. His research interests include system design, functional architectures, protocols, resource optimization and network and service management in wireless communications, including satellite communications. He has participated in several research projects within the 6th, 7th and H2020 Framework Programmes of the European Commission, taking the responsibility as WP leader in H2020 VITAL and FP7 ISITEP projects. He has also participated in numerous national research projects and technology transfer projects for public and private companies. He is co-author of one book on mobile communications and one book on mobile broadband public safety communications. He has co-authored over 100 papers published in peer-reviewed journals, magazines, conference proceedings and workshops.

Imen Grida Ben Yahia is currently with Orange Labs, France, as a Research Project Leader on Autonomic & Cognitive Management. She received her PhD degree in Telecommunication Networks from Pierre et Marie Curie University in conjunction with Télécom SudParis in 2008. Imen joined Orange in 2010 as Senior Research Engineer on Autonomic Networking. Her current research interests are autonomic and cognitive management for software and programmable networks, including artificial intelligence for SLA and fault management, knowledge and abstraction for management operations, and intent- and policy-based management. She has contributed to several European research projects such as Servery, UniverSelf and CogNet, and has authored several scientific conference and journal papers in the field of autonomic and cognitive management. Imen will be the TPC co-chair of NetSoft 2018.

Dr. Roberto Riggio is currently Chief Scientist at FBK CREATE-NET where he is leading the Future Networks Research Unit efforts on 5G Systems. He has 1 granted patent, 79 papers published in internationally refereed journals and conferences, and has generated more than 1.5 M€ in competitive funding. He received several awards including: the IEEE INFOCOM 2013 Best Demo Award, the IEEE ManFI 2015 Best Paper Award, and the IEEE CNSM 2015 Best Paper Award. He has extensive experience in the technical and project management of European and industrial projects and he is currently Project Manager of the H2020 Vital Project.