Telco Cloud NFV Metrics and Performance Management

Marie-Paule Odini, HPE

IEEE Softwarization, May 2017

Quality of Service (QoS) and Quality of Experience (QoE) are key characteristics of Telco environments. As NFV is deployed, metrics, performance measurement and benchmarking are becoming more and more important for Telco Cloud to deliver best-in-class services. If we consider performance management across a classical ETSI NFV architecture, there are different building blocks to consider: the NFV Infrastructure (NFVI), the Management and Orchestration (MANO) stack, the different Virtual Network Functions (VNF) and Network Services (NS). A number of metrics need to be defined when designing each of these components, methods need to be implemented to collect these metrics appropriately, and interfaces need to be available to carry the results across the architecture to the different ‘authorized and subscribed consumers’ of the metrics.

Performance measurements can be done as part of pre-validation, with simulated steady traffic or with traffic peaks. They can also be done on a live environment, on an ongoing basis or on demand, to check the behaviour of the network. Two types of measurements are typically performed: Quality of Service, ensuring that the network behaves according to expectations, and Quality of Experience, ensuring that the user perception of the network and service quality is according to expectations. These concepts were described in some initial specifications in ETSI NFV, i.e. PER001 [1], then further refined in other specifications that are detailed below. In parallel, a number of tools have been designed by the open source community, in particular in the context of the OPNFV collaborative project [15]. Other standards organizations, for instance IETF, have also specified benchmarking. Strong collaboration has occurred between those different entities and contributors to ensure consistent and complementary work.

As NFV touches on many different areas such as Telco Cloud, fixed and mobile network functions, customer premises environments, management platforms and processes, and service deployment and operation, many different types of metrics have to be defined and collected. It became obvious that a number of metrics could be leveraged from existing standards in some of these areas, but also that some overlaps or inconsistencies existed when mapping those metrics to the ETSI NFV architecture. As a result, an initiative was triggered across the industry to align NFV metrics across key stakeholders. In parallel, a few telecom operators that are advanced in NFV deployment, such as Verizon [16], have issued requirements for the metrics they want suppliers to provide. Finally, as technology evolves, with new hardware, networking and virtualization capabilities, metrics, measurement methods and tools also have to evolve. New usage also drives new performance requirements, which in turn drive new technologies, metrics and measurement capabilities. In conclusion, NFV metrics and performance management is a long journey, and this paper only gives an introduction and an update on some of the current highlights.

Definition and terms used in this paper

Metric: standard definition of a quantity, produced in an assessment of performance and/or reliability of the network, which has an intended utility and is carefully specified to convey the exact meaning of a measured value.

NOTE: This definition is consistent with that of Performance Metric in IETF RFC 2330 [8], ETSI GS NFV-PER001 [1] and ETSI GS NFV INF010 [2].

Measurement: set of operations having the object of determining a Measured Value or Measurement Result.

NOTE: The actual instance or execution of operations leading to a Measured Value. Based on the definition of measurement in IETF RFC 6390 [11], as cited in ISO/IEC 15939 and used in ETSI GS NFV INF010 [2].

NFV: Network Function Virtualization, as defined by ETSI NFV. NFV consists of virtualizing network functions for fixed and mobile networks and providing a managed virtualized infrastructure, with virtual compute, network and storage resources, that allows the proper deployment and operation of those virtualized network functions to deliver predictable, high-quality communication services.

NFV architecture and standard metrics

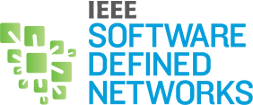

Considering the ETSI NFV reference architecture, metrics can be collected on any of the components of this architecture, as shown in Fig 1.

Fig 1 – Metrics in an NFV reference architecture

Initial work on performance and metrics was conducted in ETSI NFV PER001 [1], which was very focused on the NFV Infrastructure (NFVI). It provides a list of minimal features and requirements for the “VM Descriptor”, the “Compute Host Descriptor”, the hardware (HW) and the hypervisor to support different workloads, typically data-plane or control-plane VNFs, and to ensure portability. This work guided the initial NFV work in the industry, helping developers to best leverage IT systems for telecom functions, and infrastructure providers to enhance their system capabilities to meet the requirements of virtualized telecom software.

Then ETSI NFV INF010 [2] enumerated metrics for NFV infrastructure (NFVI), management and orchestration (MANO) service qualities that can impact the end user service qualities delivered by VNF instances hosted on NFV Infrastructures. These “service quality metrics” cover both direct service impairments, such as IP packets lost by NFV virtual networking which impacts end user service latency or quality of experience, and indirect service quality risks, such as NFV management and orchestration failing to continuously and rigorously enforce all anti-affinity rules which increases the risk of an infrastructure failure causing unacceptable VNF user service impact.

The objective of [2] is to define explicitly some metrics between Service Providers, who provide the NFVI and MANO, and Suppliers, who provide the VNFs, to ensure that the resources and operational environments provided by Service Providers offer the level of performance that a given VNF requires to deliver predictable quality of service for a given end user service. Table 1 summarizes the different metrics (source: ETSI NFV INF010 [2]).

Table 1: Summary of NFV Service Quality Metrics

| Service Metric Category | Speed | Accuracy | Reliability |
|---|---|---|---|
| Orchestration Step 1 (e.g. Resource Allocation, Configuration and Setup) | VM Provisioning Latency | VM Placement Policy Compliance | VM Provisioning Reliability; VM Dead-on-Arrival (DOA) Ratio |
| Virtual Machine operation | VM Stall (event duration and frequency); VM Scheduling Latency | VM Clock Error | VM Premature Release Ratio |
| Virtual Network Establishment | VN Provisioning Latency | VN Diversity Compliance | VN Provisioning Reliability |
| Virtual Network operation | Packet Delay; Packet Delay Variation (Jitter); Delivered Throughput | Packet Loss Ratio | Network Outage |
| Orchestration Step 2 (e.g. Resource Release) | Failed VM Release Ratio | | |
| Technology Component as-a-Service | TcaaS Service Latency | - | TcaaS Reliability (e.g. defective transaction ratio); TcaaS Outage |
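
To make a few of the Table 1 metrics more concrete, the sketch below derives a VM provisioning latency, a provisioning reliability and a dead-on-arrival ratio from a list of provisioning attempts. The record layout (`requested_at`, `ready_at`, `usable`) and the simplified ratio definitions are assumptions made for illustration only; the normative definitions are those of [2].

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class ProvisioningRecord:
    """One VM provisioning attempt (hypothetical record layout)."""
    requested_at: float          # seconds, when the request was issued
    ready_at: Optional[float]    # seconds, when the VM was delivered; None if provisioning failed
    usable: bool = False         # False if the delivered VM was dead on arrival


def provisioning_summary(records):
    """Summarize latency, reliability and DOA ratio (simplified definitions)."""
    delivered = [r for r in records if r.ready_at is not None]
    latencies = [r.ready_at - r.requested_at for r in delivered]
    dead_on_arrival = [r for r in delivered if not r.usable]
    return {
        # Mean time between the request and the delivery of the VM.
        "mean_latency_s": mean(latencies) if latencies else None,
        # Share of requests that resulted in a delivered VM.
        "provisioning_reliability": len(delivered) / len(records) if records else None,
        # Share of delivered VMs that were not usable.
        "doa_ratio": len(dead_on_arrival) / len(delivered) if delivered else None,
    }


# Illustrative data: three delivered VMs (one of them unusable) and one failed request.
records = [
    ProvisioningRecord(0.0, 12.0, True),
    ProvisioningRecord(5.0, 15.0, True),
    ProvisioningRecord(8.0, None),
    ProvisioningRecord(10.0, 18.0, False),
]
summary = provisioning_summary(records)
```

In practice a service provider would probably report percentiles rather than a mean latency; the mean is used here only to keep the sketch short.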

ETSI NFV IFA003 [6] focuses on one important element of the NFV infrastructure, the virtual switch (vswitch), and defines performance metrics for benchmarking a vswitch in different use cases such as overlay, traffic filtering and load balancing. It also defines the underlying NFVI host and VNF characteristics descriptions that ensure the consistency of the performance benchmarks. Examples of NFVI host parameters include OS and kernel version, and hypervisor type and version.

ETSI NFV TST008, “NFVI Compute and Network Metrics Specification” [5], defines normative metrics for Compute, Network and Memory. It also defines the method of measurement and the sources of error. This specification is coupled with IFA027 [4], which will carry these metrics across the MANO interfaces to the different interested entities.

Finally, ETSI NFV IFA027, “Performance measurement specifications” [4], is work in progress to define performance measurements for each of the ETSI NFV MANO interfaces: Vi-Vnfm, Ve-Vnfm-em, Or-Vnfm, Or-Vi and Os-Mo-nfvo. The objective is to have a normative specification standardizing the performance metrics that are carried across the different interfaces.

VNF benchmarking

Besides defining metrics and being able to generate and collect measurements, it is important when designing a VNF to be able to properly size the set of resources required to meet a given performance target. Several publications address this topic, either focusing on benchmarking of specific functions, e.g. vswitch [6] [7], firewall [9] or IMS [13], or, beyond that, defining a more generic methodology and platform [10] to perform VNF benchmarking whatever the VNF is: a simple VNF with only one VNFC, or a complex VNF with multiple VNFCs, data and control plane, and intense compute, I/O or memory usage.

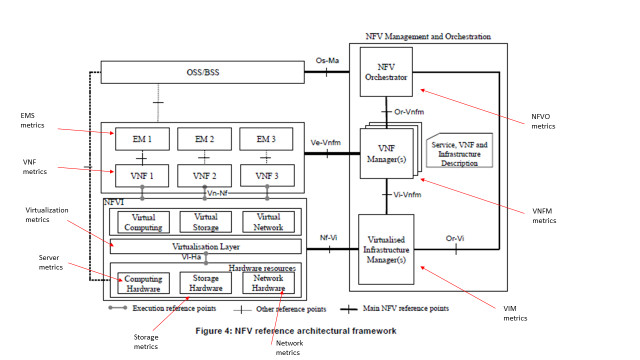

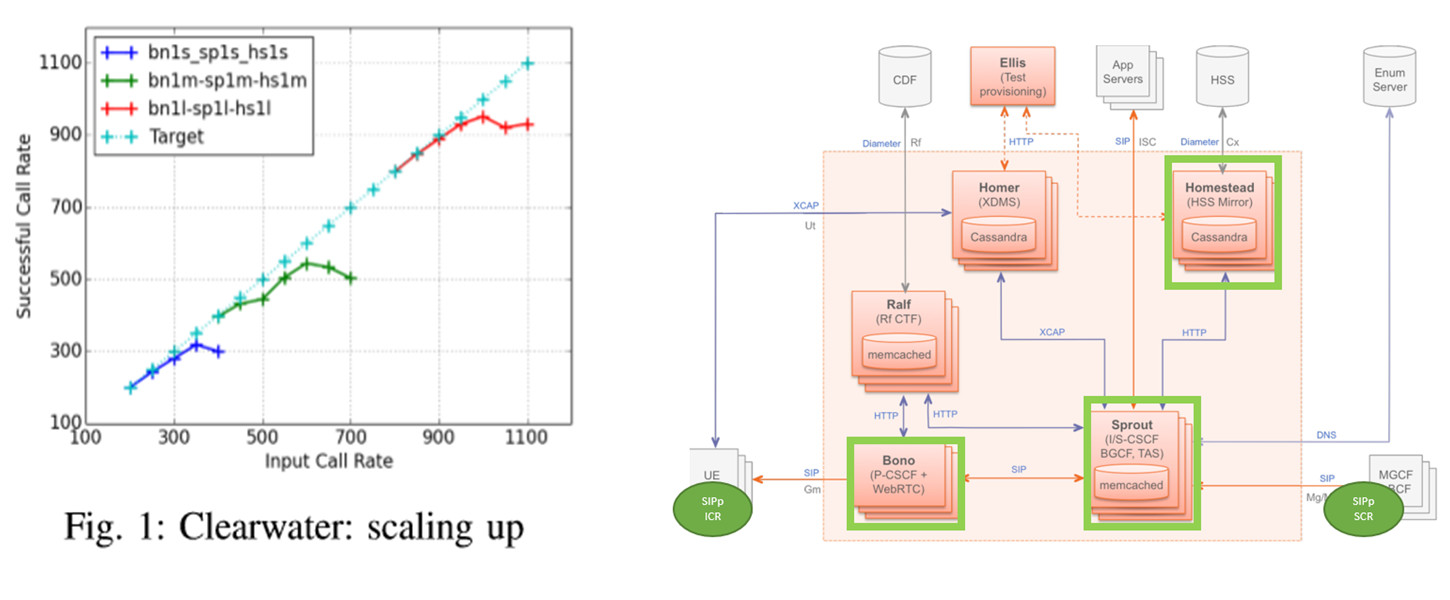

NFV Vital [13] is one of these methodologies and platforms. It studied different use cases, including the performance of the open source virtual IMS ‘Clearwater’. Fig 2 shows the ‘best’ performance when scaling the different deployment flavors: s (small), m (medium) and l (large), typically 1000 calls for the large (red) configuration.

Fig 2 – IMS VNF Benchmarking with NFV Vital and opensource vIMS Clearwater

Opensource tools

OpenStack, as an open source Virtual Infrastructure Manager (VIM), provides a number of tools for performance and benchmarking that can be leveraged for the NFVI.

- OpenStack Rally: provides a framework for performance analysis and benchmarking of individual OpenStack components as well as full production OpenStack cloud deployments. For instance, on the Nova compute module, this allows measuring the time to boot a VM, delete a VM, etc.

- OpenStack Monasca: provides a framework to define and collect metrics, set alarms and compute statistics. Monasca already includes an analytics module that leverages well-known open source programs such as the Kafka message bus, Zookeeper and other Hadoop ecosystem components.

- OpenStack Ceilometer: collects, normalises and transforms data produced by OpenStack services. Three types of meters are used: Cumulative, Delta and Gauge and a set of measurements are defined for different modules: OpenStack Compute, Networking, Block/Object Storage but also Bare metal, SDN controllers, Load Balancer-aaS (as a Service), VPNaaS, Firewall-aaS or Energy.
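
The three meter types differ in how successive samples are interpreted: a cumulative meter keeps increasing over time, a delta meter reports the change since the previous sample, and a gauge reports a standalone value. The following minimal sketch does not use the Ceilometer API; the function names and sample values are hypothetical. It only shows how cumulative samples can be turned into deltas and into a per-second rate:

```python
def cumulative_to_deltas(samples):
    """Convert cumulative samples [(timestamp_s, value), ...] into per-interval deltas."""
    return [
        (t1, v1 - v0)
        for (t0, v0), (t1, v1) in zip(samples, samples[1:])
    ]


def cumulative_to_rates(samples):
    """Convert cumulative samples into per-second rates, skipping zero-length intervals."""
    return [
        (t1, (v1 - v0) / (t1 - t0))
        for (t0, v0), (t1, v1) in zip(samples, samples[1:])
        if t1 > t0
    ]


# Hypothetical cumulative counter of bytes received on a virtual interface.
rx_bytes = [(0, 1_000), (10, 6_000), (20, 6_500)]
deltas = cumulative_to_deltas(rx_bytes)   # bytes per sampling interval
rates = cumulative_to_rates(rx_bytes)     # bytes per second
```

The sketch assumes the counter never resets; a real collector would also have to handle counter wrap-around and restarts.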

OPNFV is the Linux Foundation open source project that is developing a reference implementation of the NFV architecture. OPNFV leverages as much as possible existing code and tools from upstream open source projects that contribute to NFV architecture such as OpenStack but also OVS (Open VSwitch), OpenDaylight (SDN Controller), etc. OPNFV has defined a set of ‘umbrella’ tools for testing NFV environments. Among those, some of them are more specifically focused on performance management such as Qtip, StorPerf and vsperf project tools.

- OPNFV Qtip is an OPNFV project that provides a framework to automate the execution of benchmark tests and collect the results in a consistent manner to allow comparative analysis of the results.

- OPNFV Vsperf is another OPNFV project that provides a set of tools for VSwitch performance benchmarking. It provides an automated test-framework and comprehensive test suite based primarily on RFC2544 [10] and IFA003 [6], sending fixed size packets at different rates or fixed rate, for measuring data-plane performance of Telco NFV switching technologies as well as physical and virtual network interfaces (NFVI). It supports Vanilla OVS and OVS with DPDK (Data Plane Development Kit).

- OPNFV Storperf defines metrics for Block and Object Storage and provides a tool to measure performance in an NFVI. The current OPNFV release, “Danube”, provides performance metrics monitoring. For Block Storage, it assumes iSCSI-attached storage, though local direct-attached storage or Fibre Channel-attached storage could also be tested. For Object Storage, it assumes an HTTP-based API, such as OpenStack Swift, for accessing object storage. Typical metrics are IOPS (input/output operations per second) and latency for block storage, and TPS (transactions per second), error rate and latency for object storage. Throughput calculations are based on the formulae:

(1) Throughput = IOPS * block size (Block), or

(2) Throughput = TPS * object size (Object).

- OPNFV incubating Barometer – this new OPNFV project, very aligned with ETSI NFV TST008 [5], will provide interfaces to support monitoring of the NFVI. Draft metrics include Compute (CPU/vCPU, memory/vmemory, cache utilizations + HW: thermal, fan speed), Network (packets, octets, dropped packets, error frames, broadcast packets, multicast packets, TX and RX) and Storage (disk utilization, etc.).
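
As a small worked example of the StorPerf throughput formulae (1) and (2) above, the sketch below multiplies an operation rate by a transfer size. The function names and the input values are illustrative assumptions, not StorPerf code or measured results.

```python
def block_throughput(iops, block_size_bytes):
    """Formula (1): throughput in bytes per second for block storage."""
    return iops * block_size_bytes


def object_throughput(tps, object_size_bytes):
    """Formula (2): throughput in bytes per second for object storage."""
    return tps * object_size_bytes


# Illustrative values: 5000 IOPS with 4 KiB blocks, 200 TPS with 1 MiB objects.
block_bps = block_throughput(5000, 4096)
object_bps = object_throughput(200, 1024 * 1024)

# Convert to MiB/s for readability.
block_mib_s = block_bps / (1024 * 1024)
object_mib_s = object_bps / (1024 * 1024)
```

With these inputs the block workload moves 20,480,000 bytes per second (about 19.5 MiB/s) and the object workload 200 MiB/s, which illustrates why IOPS or TPS figures are only comparable when the block or object size is stated alongside them.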

Telco Cloud evolution and new metrics

As Telco Cloud evolves and embraces new technologies, such as new hardware platforms for the NFVI (including hardware accelerators such as FPGAs or Smart NICs), new virtualization engines such as containers, or SDN-based networking, new metrics are being defined that vendors either expose through open APIs or that can be measured with black-box methods. Standard interfaces may also need to be updated to support these new data collection and distribution mechanisms. With the move to 5G, new requirements also start to materialize, such as end-to-end network slicing performance, which requires new performance measurement and correlation mechanisms that keep up with the dynamicity of the network and its associated virtual resources. A typical example is video streaming through a network slice, where comparing network metrics (QoS) with customer quality of experience (QoE) measurements may trigger some tuning of the slice by adding virtual resources, for example additional bandwidth or more vCPU to process the video, or by inserting a VNF such as video optimization in the service chain. Last but not least, with analytics and machine learning, predictive analytics can support performance analysis by going beyond classical ‘collected’ metrics and comparing behaviors, such as network, application, consumer or device behaviors, to derive performance degradation or improvement and identify root causes. As networks become more programmable and more dynamic, the combination of performance management with metrics data collection and broader analytics will become more and more relevant.
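
The video streaming example can be sketched as a simple decision rule that compares QoS and QoE measurements for a slice and suggests a tuning action. Everything in this sketch is an assumption made for illustration: the use of a mean opinion score (MOS) as the QoE measurement, the field names, the thresholds and the action names. It is not taken from any standard or product.

```python
from dataclasses import dataclass


@dataclass
class SliceSample:
    """One observation of a video slice (hypothetical fields)."""
    packet_loss_ratio: float   # QoS, 0..1
    throughput_mbps: float     # QoS
    vcpu_utilization: float    # resource usage of the video VNF, 0..1
    mos: float                 # QoE, mean opinion score on a 1..5 scale


def recommend_actions(sample, min_mos=3.5, max_loss=0.01,
                      min_throughput_mbps=5.0, max_vcpu=0.8):
    """Suggest slice tuning actions when QoE is below target (assumed thresholds)."""
    if sample.mos >= min_mos:
        return []  # user experience is acceptable, nothing to tune
    actions = []
    if sample.packet_loss_ratio > max_loss or sample.throughput_mbps < min_throughput_mbps:
        actions.append("add_bandwidth")
    if sample.vcpu_utilization > max_vcpu:
        actions.append("add_vcpu")
    if not actions:
        # QoS and resources look fine but QoE is poor: try optimizing the video itself.
        actions.append("insert_video_optimizer_vnf")
    return actions


congested = recommend_actions(SliceSample(0.02, 8.0, 0.9, 3.0))
healthy = recommend_actions(SliceSample(0.001, 8.0, 0.4, 4.2))
unexplained = recommend_actions(SliceSample(0.001, 8.0, 0.4, 3.0))
```

A static rule like this is only a starting point; the correlation and predictive analytics discussed above would replace the fixed thresholds with behavior learned from data.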

References

[1] “NFV Performance & Portability Best Practises”, ETSI GS NFV-PER001

[2] “Service Quality Metrics”, ETSI GS NFV-INF010 V1.1.1 (2014-12)

[3] “Report on Quality Accountability Framework”, ETSI GS NFV-REL005 V1.1.1 (2016-01)

[4] “Performance Measurement Specifications”, ETSI GS NFV-IFA027, work in progress

[5] “NFVI Compute and Network Metrics Specification”, ETSI GS NFV-TST008

[6] “vSwitch Benchmarking and Acceleration Specification”, ETSI GS NFV-IFA003 V2.1.1 (2016-04)

[7] Tahhan, M., O’Mahony, B., and A. Morton, "Benchmarking Virtual Switches in OPNFV", draft-vsperf-bmwg-vswitchopnfv-00 (work in progress), July 2015.

[8] “Framework for IP Performance Metrics”, IETF RFC2330

[9] "Benchmarking Methodology for Firewall Performance", IETF RFC 3511

[10] “Benchmarking Methodology for Network Interconnect Devices”, IETF RFC2544

[11] “Guidelines for Considering New Performance Metric Development”, IETF RFC6390

[12] TL 9000 Measurements Handbook, release 5.0, July 2012, QuestForum

[13] NFV Vital: A Framework for Characterizing the Performance of Virtual Network Functions, IEEE, Nov 2015

[14] OpenStack: https://www.openstack.org/

[15] OPNFV: https://www.opnfv.org/

[16] Verizon SDN-NFV Reference Architecture

Marie-Paule Odini holds a master's degree in electrical engineering from Utah State University. Her telecom experience includes voice and data. After managing the HP worldwide VoIP program, HP wireless LAN program and HP Service Delivery program, she is now HP CMS CTO for EMEA and also a Distinguished Technologist, NFV, SDN at Hewlett-Packard. Since joining HP in 1987, Odini has held positions in technical consulting, sales development and marketing within different HP organizations in France and the U.S. All of her roles have focused on networking or the service provider business, either in solutions for the network infrastructure or for the operation.


Editor:

Stefano Salsano is Associate Professor at the University of Rome Tor Vergata. His current research interests include Software Defined Networking, Information-Centric Networking, Mobile and Pervasive Computing, Seamless Mobility. He participated in 16 research projects funded by the EU, being Work Package leader or unit coordinator in 8 of them (ELISA, AQUILA, SIMPLICITY, Simple Mobile Services, PERIMETER, OFELIA, DREAMER/GN3plus, SCISSOR) and technical coordinator in one of them (Simple Mobile Services). He has been principal investigator in several research and technology transfer contracts funded by industries (Docomo, NEC, Bull Italia, OpenTechEng, Crealab, Acotel, Pointercom, s2i Italia) with a total funding of more than 1.3M€. He has led the development of several testbeds and demonstrators in the context of EU projects, most of them released as Open Source software. He is co-author of an IETF RFC and of more than 130 papers and book chapters that have been collectively cited more than 2300 times. His h-index is 27.

