Evolving End to End Telemetry Systems to Meet the Challenges of Softwarized Environments

Michael J. McGrath and Victor Bayon-Molino, Intel Labs Europe, Leixlip, Ireland

IEEE Softwarization, May 2017

The advent of Software Defined Networking (SDN), Network Function Virtualization (NFV), Cloud and Edge Computing is redefining the network and Information and Communication Technology (ICT) domains into one which is converged and software oriented to enable a highly flexible service paradigm [1]. This transformation towards ‘softwarization’ is generating significant challenges in how we monitor and manage these new service environments. Traditionally, telemetry has provided the key capability for monitoring both network and ICT infrastructural elements and corresponding services. However, the ‘softwarization’ of functions, and running them on heterogeneous infrastructure resource environments built from standard high volume (SHV) components, requires a significant reimagining of how we use and exploit telemetry. Software oriented functions have a multi-layer composition with numerous moving parts, e.g. heterogeneous and distributed infrastructures, virtualization layers (virtual machines, containers, etc.) and service consolidation, so a full end-to-end view of services is required. Achieving this view requires a cultural change in how we consider the use of metrics. We need to move from a highly compartmentalized interpretation, i.e. metrics treated in per-function isolation, to one which utilizes metrics across all constituent elements to deliver a full end-to-end service view.

The cloudification of the networking world and new 5G use cases such as connected cars, Internet of Things (IoT), etc. will require a new dynamic, resilient, available and scalable network. This will result in an explosion of telemetry data in terms of volume, velocity and variety. The control and signaling plane of these networks will have to be highly performant in order to support the low latency requirements of network provisioning and adjustment. Fast queries against historical telemetry backends will also be required in order to support sub-10-millisecond round trip times for control of potentially thousands of services. These services will be distributed over tens of thousands of physical and virtual resources, requiring appropriate exploitation of the telemetry data in order to inform intelligent, orchestration-driven service management. In this context, using telemetry in a standalone fashion, without sophisticated analytics to filter and transform the enormous volumes of diverse metrics into meaningful and actionable insights for automation, will not be viable.

Conventional monitoring approaches, for example network monitoring, have been based on different types of non-integrated proprietary architectures and system tools, such as ping for reachability and end-to-end latency, traceroute for path availability, network management systems [2], network load and utilization [3] (i.e. standalone metrics), IP Flow Information Export (IPFIX)/NetFlow [4], and Simple Network Management Protocol (SNMP) [5] (e.g. traps and polling). The situation is made more complicated in multi-vendor environments due to the complexity of device heterogeneity in different network segments. This results in different data formats, resolutions and sampling rates, non-standard interfaces and siloed telemetry. These challenges make it very difficult to have a fully correlated and holistic view of the infrastructure and services. Addressing these challenges requires a telemetry fabric which provides an end-to-end platform for metrics collection and processing. The fabric also needs to have multiple hierarchical analytical and actuation points in order to efficiently exploit the metrics.

Hierarchical and Rules Driven Telemetry Systems

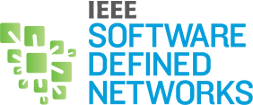

While reporting metrics at fixed periodic intervals and managing alerts via ticketing systems and dashboards will remain an important feature, it will become a legacy approach. The next generation of telemetry systems will integrate two logically separated planes, a data plane and a telemetry control plane, as shown in Figure 1. The data plane provides transport of pre-processed metrics to a scalable backend data repository with appropriate analytics and data visualization capabilities. The telemetry control plane is used to manage and control the runtime behavior of the telemetry fabric, enabling or disabling data collection and local processing of the data. Control of the telemetry fabric is executed in an automated manner to support both scalability and responsiveness. Potential approaches include the use of rules or heuristics to define what metrics are collected and how they are processed and published. The rules (collection, processing and publishing) are generated by an analytics backend in an automated manner using approaches such as machine learning for different contexts of interest, e.g. performance, reliability, etc. Once validated, the rules are stored in a repository from which a telemetry control system selects and deploys them to nodes within the telemetry fabric. The richness and variety of the data that the telemetry fabric can provide, from time series data to textual, contextual and configuration data, will allow the development of more effective machine learning models. Local telemetry resources will also be able to reason and act with semi-autonomy, minimizing both data and control traffic.

Each metric will be configured individually, from collection rates to local processing, and the telemetry fabric will also have responsibility for the lifecycle of metrics. Appropriate policies will determine the lifespan of metrics, the resolution and collection rates at the different hierarchical levels within the telemetry fabric, and when to enable or disable the collection and processing of metrics. The goal here is to prevent data redundancy over time due to system evolution and changes in service SLAs by continuously evaluating the value of metrics from a consumption/utility perspective.

Figure 1: Scalable next generation telemetry fabric architecture
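The rule-driven control described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the names `TelemetryRule`, `TelemetryNode` and `TelemetryController`, the `cpu_util` metric and the 80% threshold are all hypothetical, and the rule here is written by hand rather than generated by an analytics backend.

```python
from dataclasses import dataclass, field, replace
from statistics import mean
from typing import Callable, Dict, List, Optional


@dataclass
class TelemetryRule:
    """Hypothetical rule covering collection, processing and publishing."""
    metric: str
    interval_s: float                          # collection interval in seconds
    process: Callable[[List[float]], float]    # local processing of raw samples
    publish_if: Callable[[float], bool]        # condition for publishing upstream
    enabled: bool = True


@dataclass
class TelemetryNode:
    """Hypothetical node agent that holds the rules deployed to it."""
    name: str
    rules: Dict[str, TelemetryRule] = field(default_factory=dict)

    def apply(self, rule: TelemetryRule) -> None:
        self.rules[rule.metric] = rule

    def evaluate(self, metric: str, samples: List[float]) -> Optional[float]:
        """Process samples locally; return a value only if it should be published."""
        rule = self.rules.get(metric)
        if rule is None or not rule.enabled or not samples:
            return None
        value = rule.process(samples)
        return value if rule.publish_if(value) else None


class TelemetryController:
    """Hypothetical control-plane component: stores rules and deploys them."""

    def __init__(self) -> None:
        self.repository: Dict[str, TelemetryRule] = {}

    def store(self, rule: TelemetryRule) -> None:
        self.repository[rule.metric] = rule

    def deploy(self, metric: str, nodes: List[TelemetryNode]) -> None:
        for node in nodes:
            # Each node gets its own copy so it can be tuned independently.
            node.apply(replace(self.repository[metric]))


controller = TelemetryController()
controller.store(TelemetryRule("cpu_util", 1.0, mean, lambda v: v > 80.0))
edge = TelemetryNode("edge-1")
controller.deploy("cpu_util", [edge])

published = edge.evaluate("cpu_util", [70.0, 95.0, 90.0])  # mean 85.0 exceeds threshold
suppressed = edge.evaluate("cpu_util", [10.0, 20.0])       # mean 15.0 stays local
```

In this sketch the node publishes only the processed value that satisfies the rule, which is one simple way local processing could reduce data-plane traffic. Disabling a rule on a node corresponds to the control plane switching off collection for that metric.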

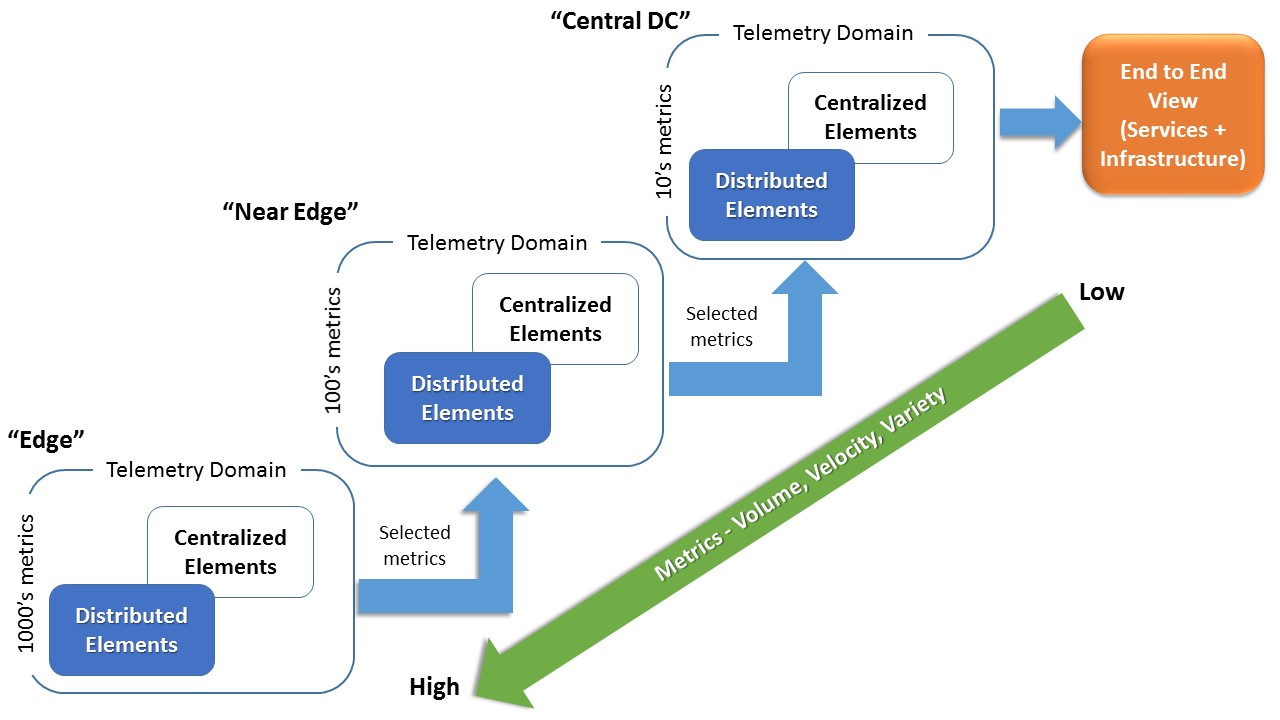

In order to support true scalability, telemetry systems will need to adopt a hierarchical architectural approach. As shown in Figure 2, this architecture separates telemetry collection and processing into domains which map to system architecture constructs. Each telemetry domain has an architecture similar to Figure 1 and is connected to the next higher-level domain along an end-to-end chain, exposing selected metrics for consumption through standard APIs. Abstraction of the metrics through the domains is used to provide an end-to-end view of the services and their host infrastructure by reducing thousands of metrics to a handful of metrics for a given context of interest. This end-to-end hierarchical approach, where all layers of the softwarized environment can be behaviorally correlated and actuated upon in real time, will enable better service performance. The approach will also facilitate improved end-user quality of experience, increased efficiency of infrastructure utilization, and preemptive issue identification.

Figure 2: Hierarchical Telemetry Fabric Architecture
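As a rough illustration of how metrics might be abstracted through a chain of domains, the following Python sketch has each domain reduce its own samples and its children's exposed values to a single number. The `TelemetryDomain` class, the domain names and the choice of mean and max as reducers are assumptions made for illustration only; the article does not prescribe any particular reduction.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, List


@dataclass
class TelemetryDomain:
    """Hypothetical telemetry domain exposing one abstracted metric upward."""
    name: str
    reducer: Callable[[List[float]], float]          # how this domain abstracts its inputs
    local_samples: List[float] = field(default_factory=list)
    children: List["TelemetryDomain"] = field(default_factory=list)

    def exposed_value(self) -> float:
        """Combine local samples with each child's exposed value, then reduce.

        Assumes the domain has at least one sample or one child.
        """
        values = list(self.local_samples)
        values += [child.exposed_value() for child in self.children]
        return self.reducer(values)


# Node domains average their raw latency samples (milliseconds, made-up data).
node_a = TelemetryDomain("node-a", mean, local_samples=[2.0, 4.0])
node_b = TelemetryDomain("node-b", mean, local_samples=[10.0, 14.0])

# Higher domains expose only the worst value seen below them.
rack = TelemetryDomain("rack-1", max, children=[node_a, node_b])
service = TelemetryDomain("service", max, children=[rack])

worst_latency = service.exposed_value()
```

Here four raw samples collapse to a single service-level figure, while the per-node detail remains available inside the lower domains if a higher level needs to drill down.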

As telemetry frameworks evolve with new architectural approaches to meet the challenges of softwarized environments, the following general capabilities will be required:

- A low node level resource footprint for telemetry service agents.

- Dedicated control and data planes.

- Dynamically tunable telemetry (up-sampling, down-sampling and filtering at source).

- Support for rich and varied data sources: from time series to frequency domain (e.g. tracing) to textual (e.g. logs) to structured (e.g. configuration) to network topologies, etc.

- Integration with analytics pipelines (e.g. machine learning model production, management and model execution and evaluation) as well as batch and real-time (streaming) operation modes.

- Hierarchical telemetry architecture with multiple analytical and actuation points across the fabric to support advanced machine learning execution across the infrastructure landscape.

- Trusted infrastructure ingredient level metrics, e.g. exposure of low level counters not currently available.

- Support for tens of thousands of devices with hundreds of metrics per resource at second and sub-second (frequency domain) sampling rates with enhanced edge pre-processing.

- Move from user-defined rules/heuristics and manual management by exception to an active and automated way to control and manage infrastructure fabrics.

- Integration of telemetry system APIs with infrastructure automation. This integration will facilitate the creation of machine learning models and rules that can be created, read, updated and deleted (CRUD) across any level of the hierarchy.
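Two of the capabilities listed above, down-sampling and filtering at source, can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions: the function names are hypothetical, block averaging and a deadband filter are just two of many possible reduction techniques, and the device and metric counts are round numbers chosen to match the orders of magnitude mentioned in the list.

```python
from typing import List


def downsample(samples: List[float], factor: int) -> List[float]:
    """Average each consecutive group of `factor` samples (last group may be shorter)."""
    groups = [samples[i:i + factor] for i in range(0, len(samples), factor)]
    return [sum(group) / len(group) for group in groups]


def deadband_filter(samples: List[float], threshold: float) -> List[float]:
    """Keep a sample only if it moved more than `threshold` from the last kept one."""
    kept: List[float] = []
    for value in samples:
        if not kept or abs(value - kept[-1]) > threshold:
            kept.append(value)
    return kept


def samples_per_second(devices: int, metrics_per_device: int, rate_hz: float) -> float:
    """Fabric-wide sample rate before any source-side reduction."""
    return devices * metrics_per_device * rate_hz


averaged = downsample([1.0, 3.0, 5.0, 7.0, 9.0], factor=2)
filtered = deadband_filter([10.0, 10.2, 10.4, 12.0, 12.1, 9.0], threshold=1.0)
raw_rate = samples_per_second(10_000, 100, 1.0)
```

With the illustrative figures of 10,000 devices, 100 metrics each and one sample per second, the raw rate is one million samples per second. Averaging in groups of ten at source would cut the published rate to one tenth of that, which is the kind of reduction edge pre-processing is meant to provide.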

Evolving Metrics

While the architectural evolution of telemetry platforms for softwarized environments is an important consideration, new metrics will also be required to monitor the unique characteristics of these environments. For example, in deployments where multiple Virtualized Network Functions (VNFs) are consolidated on the same physical compute node in a multi-tenanted environment, how do we measure the effectiveness of isolation, which is important in quantifying noisy neighbor effects? Existing telemetry collection frameworks are optimized primarily for health monitoring (e.g. status checking via SNMP) and not for observing a wide range of system behaviors and characteristics. Exposure of new metrics at the hardware, virtualization and service layers will be required. Some vendors are already moving towards an all-streaming plus analytics approach to telemetry [6] for visibility and granular control. As the network world moves towards solutions based on merchant silicon and industry standard platforms (e.g. x86 on the control plane of switches), new networking platforms will integrate instrumentation and telemetry as a main feature of their architectures [7]. The ability to expose fine grained ingredient level metrics (Central Processing Unit (CPU), chipset, Network Interface Card (NIC), Solid State Drive (SSD), etc.) will become an important platform differentiator and a source of new infrastructural insights [8]. The ability to trust the source of metrics data will also become increasingly important. Telemetry which is ‘signed’ at source in a manner that supports appropriate verification will become a necessary feature, especially when used for actuation purposes.
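The idea of telemetry that is ‘signed’ at source and verified before use can be sketched with Python's standard library. Note the substitution: this sketch uses an HMAC-SHA256 tag computed with a shared key, which is a message authentication code rather than a true digital signature. A real deployment might instead use asymmetric signatures or hardware-rooted keys. The record fields, the key and the function names are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(record: dict) -> bytes:
    """Serialize a record deterministically so both sides hash the same bytes."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_metric(record: dict, key: bytes) -> dict:
    """Attach an HMAC-SHA256 tag to a metric record at the source."""
    tag = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    return {"record": record, "signature": tag}


def verify_metric(signed: dict, key: bytes) -> bool:
    """Recompute the tag and compare in constant time before acting on the metric."""
    expected = hmac.new(key, _canonical(signed["record"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed["signature"])


shared_key = b"example-key-for-illustration-only"   # assumption: provisioned out of band
signed = sign_metric({"node": "edge-1", "metric": "cpu_util", "value": 85.0}, shared_key)

# A consumer would verify before using the metric for actuation.
is_trusted = verify_metric(signed, shared_key)

# A record altered in transit fails verification.
tampered = {"record": {**signed["record"], "value": 5.0}, "signature": signed["signature"]}
is_tampered_trusted = verify_metric(tampered, shared_key)
```

Verification before actuation matters because an orchestrator that scales or migrates services on the basis of forged metrics could be steered by whoever injected them.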

Conclusions

The introduction of SDN, NFV, Cloud and Edge Computing technologies is driving significant and rapid changes in the ICT domain. Key among these changes is the realization of a domain that is significantly more converged, flexible and software oriented. Telemetry systems need to evolve to deal with the ever increasing velocity, volume and variety of data becoming available from these softwarized environments. New telemetry architectures will feature separate data and control planes to support scalability and responsiveness. Analytical approaches such as machine learning will be used to define telemetry system behaviors, such as determining what metrics are collected, when they are collected and how they are processed, and to facilitate end-to-end autonomous service and infrastructure management.

References

- [1] A. Manzalini et al., "Towards 5G Software-Defined Ecosystems - Technical Challenges, Business Sustainability and Policy Issues," IEEE SDN Initiative (SDN4FNS) white paper, Jan. 2014. [Online]. Available: http://sdn.ieee.org/publications.

- [2] "Open Network Management System," [Online]. Available: https://www.opennms.org/en.

- [3] A. Hassidim, D. Raz, M. Segalov and A. Shaqed, "Network Utilization: The Flow View," in IEEE INFOCOM 2013, Turin, Italy, 2013.

- [4] "NetFlow," [Online]. Available: https://en.wikipedia.org/wiki/NetFlow.

- [5] "SNMP," [Online]. Available: https://en.wikipedia.org/wiki/Simple_Network_Management_Protocol.

- [6] "Telemetry and Analytics," [Online]. Available: https://www.arista.com/en/solutions/telemetry-analytics.

- [7] "Broadcom BCM56960-Series," [Online]. Available: https://www.broadcom.com/products/Switching/Data-Center/BCM56960-Series.

- [8] "Intel Performance Counter Monitor (PCM)," [Online]. Available: https://software.intel.com/en-us/articles/intel-performance-counter-monitor.

Michael J. McGrath is a senior researcher at Intel Labs Europe. He holds a PhD from Dublin City University and an MSc in Computing from ITB. Michael has been with Intel for 17 years, holding a variety of operational and research roles. Michael’s current research focus is on NFV Orchestration and edge based cloud computing. He is currently a researcher in the H2020 Superfluidity project focusing on the optimization of Virtualized Network Functions and applications over 5G Network/IT infrastructures. Previously Michael was the research lead for T-NOVA FP7 which focused on Virtualised Network Functions as a Service. Michael is also the research lead in the BT Intel Co-Lab based at Adastral Park in the UK which is focused on research relating to the deployment of Virtualised Network Functions in carrier grade network environments. Michael has co-authored more than 35 peer reviewed publications including two books.

Dr. Victor Bayon-Molino is an applied researcher with Intel Labs Europe. His research focuses on developing advanced instrumentation, telemetry, monitoring and analytics systems to support novel compute, network and storage fabrics and their orchestration from enterprise and large-scale private data centers to public clouds.

Editor:

Francesco Benedetto was born in Rome, Italy, on August 4th, 1977. He received the Dr. Eng. degree in Electronic Engineering from the University of ROMA TRE, Rome, Italy, in May 2002, and the PhD degree in Telecommunication Engineering from the University of ROMA TRE, Rome, Italy, in April 2007.

In 2007, he was a research fellow of the Department of Applied Electronics of the Third University of Rome. Since 2008, he has been an Assistant Professor of Telecommunications at the Third University of Rome (2008-2012, Applied Electronics Dept.; 2013-Present, Economics Dept.), where he currently teaches the course "Elements of Telecommunications" (formerly Signals and Telecommunications) in the Computer Engineering degree and the course "Software Defined Radio" in the Laurea Magistralis in Information and Communication Technologies. Since the academic year 2013/2014, he has also been in charge of the course "Cognitive Communications" in the Ph.D. degree in Applied Electronics at the Department of Engineering, University of Roma Tre.

The research interests of Francesco Benedetto are in the field of software defined radio (SDR) and cognitive radio (CR) communications, signal processing for financial engineering, digital signal and image processing in telecommunications, code acquisition and synchronization for the 3G mobile communication systems and multimedia communication. In particular, he has published numerous research articles on SDR and CR communications, signal processing applied to financial engineering, multimedia communications and video coding, ground penetrating radar (GPR) signal processing, spread-spectrum code synchronization for 3G communication systems and satellite systems (GPS and GALILEO), correlation estimation and spectral analysis.

He is a Senior Member of the Institute of Electrical and Electronics Engineers (IEEE), and a member of the following IEEE Societies: IEEE Standards Association, IEEE Young Professionals, IEEE Software Defined Networks, IEEE Communications, IEEE Signal Processing, IEEE Vehicular Technology. Finally, he is also a member of CNIT (Italian Inter-Universities Consortium for Telecommunications). He is the Chair of the IEEE 1900.1 WG on dynamic spectrum access, the Chair of the Int. Workshop on Signal Processing for Secure Communications (SP4SC), and the co-Chair of the WP 3.5 on signal processing for ground penetrating radar of the European Cost Action YU1208.
